Published on Jan 09, 2026

Flexpad is an interactive system that combines a depth camera and a projector to transform sheets of plain paper or foam into flexible, highly deformable, and spatially aware handheld displays. We present a novel approach for tracking deformed surfaces from depth images in real time. It captures deformations in high detail, is very robust to occlusions created by the user’s hands and fingers, and does not require any kind of markers or visible texture.

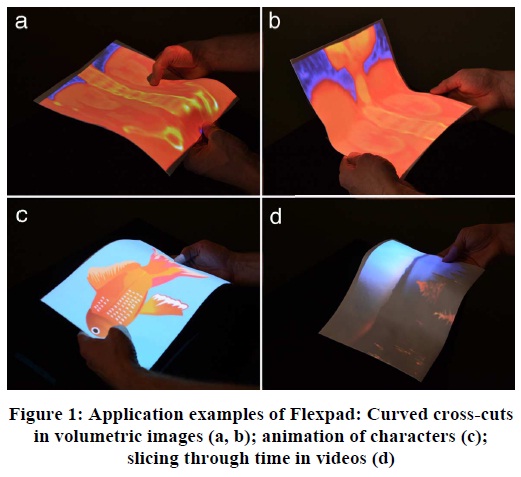

As a result, the display is considerably more deformable than in previous work on flexible handheld displays, enabling novel applications that leverage the high expressiveness of detailed deformation. We illustrate these unique capabilities through three application examples: curved cross-cuts in volumetric images, deforming virtual paper characters, and slicing through time in videos. Results from two user studies show that our system is capable of detecting complex deformations and that users are able to perform them quickly and precisely.

Projecting visual interfaces onto movable real-world objects has been an ongoing area of research. By closely integrating physical and digital information spaces, such systems leverage people's intuitive understanding of how to manipulate real-world objects for interaction with computer systems. Building on inexpensive depth sensors, a stream of recent research has presented elegant tracking-projection approaches that transform real-world objects into displays without requiring any instrumentation of these objects. None of these approaches, however, interprets the deformation of flexible objects.

Flexible deformation can expand the potential of projected interfaces. Deforming everyday objects allows for a surprisingly rich set of interactions that involve many degrees of freedom yet remain very intuitive: people bend pages in books, squeeze balls, model clay, and fold origami, to cite only a few examples. Adding deformation as another degree of freedom to handheld displays therefore has great potential to increase the richness and expressiveness of interaction. We present Flexpad, a system that supports highly flexible bending interactions for projected handheld displays. A Kinect depth camera and a projector form a camera-projection unit that lets people use blank sheets of paper, foam, or acrylic of different sizes and shapes as flexible displays.

Flexpad makes two main technical contributions. First, we contribute an algorithm that captures even complex deformations in high detail and in real time. It does not require any instrumentation of the deformable handheld material. Hence, unlike previous work that requires markers, visible texture, or embedded electronics, virtually any sheet at hand can be used as a deformable projection surface for interactive applications. Second, we contribute a novel, robust method for detecting hands and fingers with a Kinect camera using optical analysis of the surface material.

This is crucial for robustly capturing deformable surfaces under realistic conditions, where the user occludes significant parts of the surface while deforming it. Since the solution is inexpensive and requires only standard hardware components, deformable handheld displays could become widespread. The highly flexible handheld display also creates novel application possibilities. Prior work on immobile flexible displays has shown the effectiveness of highly detailed deformations, e.g. for 2.5D modeling and multi-dimensional navigation. Yet the existing inventory of interactions with flexible handheld displays is restricted to low-detail deformations, mostly because of the very limited flexibility of the displays and the limited resolution of deformation capture. Flexpad significantly extends this inventory with highly flexible and multi-dimensional deformations.

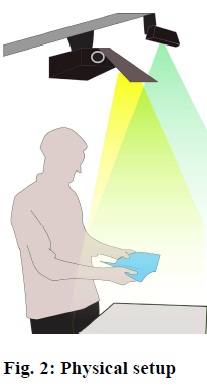

Flexpad enables users to interact with highly flexible projected displays in interactive real-time applications. The setup consists of a Kinect camera, a projector, and a sheet of paper, foam, or acrylic that serves as the projection surface. Our current setup is illustrated in Fig. 2. A Kinect camera and a full HD projector (ViewSonic Pro 8200) are mounted on the ceiling above the user, next to each other, and are calibrated to share one coordinate space. The setup creates an interaction volume of approximately 110 × 55 × 43 cm within which users can freely move, rotate, and deform the projection surface. The average resolution of the projection is 54 dpi. The user can either sit at a table or stand. The table surface is located 160 cm from the camera and the projector.
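Because camera and projector share one calibrated coordinate space, a 3D point on the sheet measured by the depth camera can be mapped to the projector pixel that illuminates it. The sketch below is an illustration under stated assumptions, not Flexpad's calibration code: it assumes a standard pinhole model for the projector, a rigid transform (rotation, translation) from camera to projector coordinates, and intrinsics `fx`, `fy`, `cx`, `cy`. All names and values are hypothetical.

```python
from dataclasses import dataclass
from typing import Sequence, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class ProjectorCalibration:
    """Hypothetical calibration: camera-to-projector rigid transform plus pinhole intrinsics."""
    rotation: Sequence[Sequence[float]]  # 3x3 rotation matrix, camera -> projector
    translation: Vec3                    # translation in metres, camera -> projector
    fx: float                            # focal length in pixels (x)
    fy: float                            # focal length in pixels (y)
    cx: float                            # principal point in pixels (x)
    cy: float                            # principal point in pixels (y)


def camera_point_to_projector_pixel(p: Vec3, calib: ProjectorCalibration) -> Tuple[float, float]:
    """Map a 3D point in camera coordinates to projector pixel coordinates (pinhole model)."""
    # Rigid transform into the projector's frame: p_proj = R * p_cam + t.
    x, y, z = (
        sum(calib.rotation[row][col] * p[col] for col in range(3)) + calib.translation[row]
        for row in range(3)
    )
    if z <= 0:
        raise ValueError("point lies behind the projector")
    # Perspective division, then the intrinsic mapping to pixel coordinates.
    return (calib.fx * x / z + calib.cx, calib.fy * y / z + calib.cy)
```

For example, with an identity rotation, the projector 10 cm beside the camera (translation `(0.1, 0, 0)`), `fx = fy = 1600` and principal point `(960, 540)`, a point 1.6 m straight below the camera, i.e. on the table, maps to approximately projector pixel (1060, 540).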

Our implementation uses a standard desktop PC with an Intel i7 processor, 8 GB RAM, and an AMD Radeon HD 6800 graphics card. While we currently use a fixed setup, the Kinect camera could be shoulder-worn with a mobile projector attached to it, as shown in prior work, allowing for mobile or nomadic use. The key contribution of Flexpad is its approach for markerless capture of a high-resolution 3D model of a deformable surface, including its pose and deformation (see the description of the model below). The system can also grab 2D image content from any window in Microsoft Windows and project it, correctly warped, onto the deformable display. Alternatively, it can use 3D volume data as input for the projection. The detailed deformation model enables novel applications that make use of varied deformations of the display.
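One common way to project 2D window content correctly warped onto a deformed sheet, assumed here rather than taken from Flexpad's renderer, is to sample the fitted surface on a regular grid, give each vertex a texture coordinate into the grabbed window image, and let the GPU draw the resulting textured triangle mesh through the calibrated projector matrices. The sketch below only builds such a mesh; `surface` stands for any function that returns the 3D point at normalized sheet coordinates (u, v), and the grid resolution is a hypothetical parameter.

```python
from typing import Callable, List, Tuple

Vec3 = Tuple[float, float, float]
UV = Tuple[float, float]
Triangle = Tuple[int, int, int]


def build_textured_mesh(
    surface: Callable[[float, float], Vec3], cols: int, rows: int
) -> Tuple[List[Vec3], List[UV], List[Triangle]]:
    """Sample a parametric sheet surface on a (cols x rows) vertex grid.

    Returns vertex positions, per-vertex texture coordinates in [0, 1],
    and triangles as vertex-index triples (two per grid cell, same winding).
    """
    if cols < 2 or rows < 2:
        raise ValueError("need at least a 2 x 2 vertex grid")
    vertices: List[Vec3] = []
    uvs: List[UV] = []
    for j in range(rows):
        for i in range(cols):
            u, v = i / (cols - 1), j / (rows - 1)
            vertices.append(surface(u, v))  # 3D position on the deformed sheet
            uvs.append((u, v))              # where to sample the window image
    triangles: List[Triangle] = []
    for j in range(rows - 1):
        for i in range(cols - 1):
            a = j * cols + i  # top-left vertex of this cell
            b = a + 1         # top-right
            c = a + cols      # bottom-left
            d = c + 1         # bottom-right
            triangles.append((a, b, c))
            triangles.append((b, d, c))
    return vertices, uvs, triangles
```

For instance, `build_textured_mesh(lambda u, v: (0.216 * u, 0.279 * v, 1.2), 20, 26)` yields a flat letter-sized grid 1.2 m from the camera; in a real system the callable would evaluate the fitted deformation model instead, and a denser grid trades rendering cost for smoother warping.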

Sheets of many different sizes and materials can be used as passive displays, including standard office paper. In our experiments, we used letter-sized sheets of two materials that have demonstrated good haptic characteristics. The fully flexible display is made of 2 mm thick white foam (Darice Foamies crafting foam). It allows the user to switch between different deformations easily and quickly. The bending stiffness index $S_b^*$ of this material was determined to be 1.12 N·m⁷/kg³, which is comparable to the stiffness of 160 g/m² paper. ($S_b^* = S_b / w^3$ is the bending stiffness $S_b$ normalized with respect to the sheet's basis weight $w$ in kg/m² [27].) The display weighs 12 g.
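The normalization can be inverted to recover an absolute bending stiffness, $S_b = S_b^* \cdot w^3$. As a worked example, assuming the 12 g figure refers to one letter-sized sheet (8.5 × 11 in, about 0.0603 m²), the basis weight is roughly 0.199 kg/m² and $S_b$ comes out at roughly 0.0088 N·m. These derived numbers are our own arithmetic on the stated values, not measurements reported for Flexpad.

```python
# Worked example: invert Sb* = Sb / w^3 for the foam display.
# Assumption: the 12 g weight refers to one letter-sized sheet.
LETTER_WIDTH_M = 8.5 * 0.0254   # 0.2159 m
LETTER_HEIGHT_M = 11 * 0.0254   # 0.2794 m


def basis_weight(mass_kg: float, width_m: float, height_m: float) -> float:
    """Basis weight w in kg/m^2, i.e. mass per unit area of the sheet."""
    return mass_kg / (width_m * height_m)


def bending_stiffness(sb_star: float, w: float) -> float:
    """Sb in N*m from the index Sb* (N*m^7/kg^3) and basis weight w (kg/m^2)."""
    return sb_star * w ** 3


w = basis_weight(0.012, LETTER_WIDTH_M, LETTER_HEIGHT_M)
sb = bending_stiffness(1.12, w)
print(f"w = {w:.3f} kg/m^2, Sb = {sb:.4f} N*m")  # w = 0.199 kg/m^2, Sb = 0.0088 N*m
```

The units check out: N·m⁷/kg³ multiplied by (kg/m²)³ leaves N·m.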

The shape-retaining display retains its deformation even when the user is not holding it. It consists of 2 mm thick white foam with Amalog 1/16" armature wire attached to its back side. It allows for more detailed modeling of the deformation, easy modification, and easy holding. To characterize its deformability, we measured the minimal torque required to permanently deform the material: with the sheet affixed at one side, a torque as small as 0.1 N·m is enough to permanently deform it, which shows that the material is very easy to deform. The sheet weighs 25 g.
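To put the 0.1 N·m figure in perspective, it can be converted into the force a hand would apply at the free edge of the sheet, F = τ / r. The source does not state which side was affixed, so the sketch below assumes a static lever with the force applied perpendicular to the sheet at the opposite edge and computes both letter-sheet cases: about 0.36 N with a 27.94 cm lever arm and about 0.46 N with a 21.59 cm lever arm, i.e. roughly the weight of a 36 g or 47 g object.

```python
# Convert the permanent-deformation torque into an equivalent edge force.
# Assumption: static lever, force applied perpendicular to the sheet at the
# edge opposite the affixed side of a letter-sized sheet.
G = 9.80665  # standard gravity in m/s^2


def edge_force(torque_nm: float, lever_arm_m: float) -> float:
    """Force in N at distance lever_arm_m from the affixed edge that yields torque_nm."""
    return torque_nm / lever_arm_m


cases = (
    ("lever arm 0.2794 m (short edge affixed)", 11 * 0.0254),
    ("lever arm 0.2159 m (long edge affixed)", 8.5 * 0.0254),
)
for label, arm in cases:
    f = edge_force(0.1, arm)
    print(f"{label}: {f:.2f} N, roughly the weight of {1000 * f / G:.0f} g")
```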

Existing image-based algorithms for tracking deformable surfaces [36, 6, 14] rely on visible features. In this section, we present an approach that requires only image data from a Kinect depth sensor. This allows an entirely blank (or arbitrarily textured) deformable projection surface to be tracked in high detail. Moreover, since the depth sensor operates in the infrared spectrum, it does not interfere with the visible projection, no matter what content is projected. A challenge of depth data is that it does not provide reliable image cues on local movement, which renders traditional feature-based tracking methods ineffective.

The solution to this problem is a more global, model-based approach: we introduce a parameterized, deformable object model that is fit to the depth image data by a semi-global search algorithm, accounting for occlusion by the user's hands and fingers. In the following, we explain:

1) the removal of hands and fingers from the input data,

2) the global deformation model and

3) how a global optimization method is used to fit the deformation model to the input data in real time.
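The core idea of these steps, fitting a parametric surface only to the depth pixels that survive hand removal, can be illustrated with a deliberately simplified sketch. It is not Flexpad's deformation model or search algorithm: it assumes a hypothetical 4-parameter surface z(x, y) = a + b·x + c·y + d·x² (a tilted sheet with a single cylindrical bend) over normalized sheet coordinates, and it marks occluded pixels as `None`. Because this toy model is linear in its parameters, ordinary least squares via the normal equations stands in for the semi-global search that a richer, nonlinear model would need.

```python
from typing import List, Optional, Sequence, Tuple


def basis(x: float, y: float) -> List[float]:
    """Basis functions of the toy model z = a + b*x + c*y + d*x^2."""
    return [1.0, x, y, x * x]


def solve(matrix: List[List[float]], rhs: List[float]) -> List[float]:
    """Solve a small dense linear system by Gaussian elimination with partial pivoting."""
    n = len(rhs)
    m = [row[:] + [rhs[i]] for i, row in enumerate(matrix)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("singular system: too few valid depth pixels")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for k in range(col, n + 1):
                m[r][k] -= factor * m[col][k]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        x[r] = (m[r][n] - sum(m[r][k] * x[k] for k in range(r + 1, n))) / m[r][r]
    return x


def fit_surface(depth: Sequence[Sequence[Optional[float]]],
                xs: Sequence[float], ys: Sequence[float]) -> Tuple[float, ...]:
    """Least-squares fit of the toy model, skipping occluded (None) depth pixels."""
    n = 4
    ata = [[0.0] * n for _ in range(n)]
    atb = [0.0] * n
    for row, y in zip(depth, ys):
        for z, x in zip(row, xs):
            if z is None:  # pixel removed as hand/finger: contributes nothing
                continue
            phi = basis(x, y)
            for i in range(n):
                atb[i] += phi[i] * z
                for j in range(n):
                    ata[i][j] += phi[i] * phi[j]
    return tuple(solve(ata, atb))
```

Because occluded pixels simply drop out of the sums, the fit degrades gracefully as long as enough of the sheet remains visible; only when too few pixels are left does the system become singular.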

While the user is interacting with the display, hands and fingers partially occlude the surface in the depth image. For robust deformation tracking, it is important to classify these pixels as not belonging to the deformable surface, so that the model is not fit to wrong depth values. Because the proposed tracking method can handle missing data, the solution is to remove the occluded parts from the input images in a preprocessing step. Due to the low resolution of the Kinect depth sensor, however, it is difficult to perform a shape-based classification of fingers and hands at larger distances.

In particular, when a finger or the flat hand touches the surface, the resolution is insufficient to reliably differentiate it from the underlying surface. Existing approaches use heuristics that work reliably as long as the back of the hand is kept at some distance above the surface. This assumption does not hold in our more general case, where people use their hands and fingers directly on the surface to deform it.

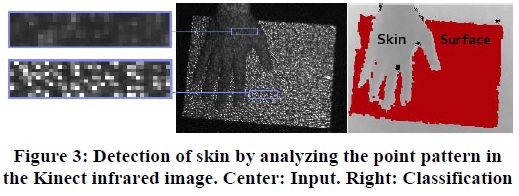

We introduce optical surface material analysis as a novel method for distinguishing between skin and the projection surface using the Kinect sensor. It is based on the observation that different materials have different reflectivity and translucency properties. Some materials, such as skin, are translucent enough to cause subsurface scattering of light. This scattering blurs the dot pattern projected by the Kinect, which reduces the image gradients in the raw infrared image provided by the Freenect driver (see Fig. 3).
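The effect of subsurface scattering on the dot pattern can be illustrated in one dimension. A sharp dot, as reflected by a diffuse surface, has steep flanks; the same dot spread out by scattering, modelled here as a simplifying assumption by a 3-tap box blur, has both a lower peak and a lower maximum gradient. The intensity values are made up for illustration and are not Kinect measurements.

```python
from typing import List, Sequence


def box_blur(signal: Sequence[float]) -> List[float]:
    """3-tap moving average with clamped edges; a crude stand-in for subsurface scattering."""
    n = len(signal)
    return [
        (signal[max(i - 1, 0)] + signal[i] + signal[min(i + 1, n - 1)]) / 3.0
        for i in range(n)
    ]


def max_gradient(signal: Sequence[float]) -> float:
    """Largest absolute difference between neighbouring samples."""
    return max(abs(b - a) for a, b in zip(signal, signal[1:]))


# Hypothetical IR intensities across one projected dot.
sharp_dot = [10.0, 10.0, 10.0, 200.0, 10.0, 10.0, 10.0]  # e.g. on paper or foam
blurred_dot = box_blur(sharp_dot)                          # the same dot on skin

print(max(sharp_dot), max_gradient(sharp_dot))  # 200.0 190.0
print(max(blurred_dot), max_gradient(blurred_dot))  # both values drop markedly
```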

The display surface, made of paper, cardboard, foam, or any other material with highly diffuse reflection, differs from skin in both reflectivity and translucency, so low peak values or low gradient values provide a stable criterion for classifying non-display areas. The projector inside the Kinect has a constant intensity, and the infrared camera has a constant shutter time and aperture. Hence, the brightness of the dot pattern is constant for constant material properties, object distances, and angles. As a consequence, the surface reflectivity of an object with diffuse material can be determined from the local dot brightness.

However, because the brightness in the infrared image decreases with increasing distance, the local gradients and peak values have to be evaluated with respect to the depth value of the corresponding pixel in the depth image. Pixels that cannot be associated with a dot of the pattern are not classified directly; instead, they receive the classification of the surrounding pixels through iterative filtering. Results from an evaluation, reported below, show that this classification scheme works reliably at distances of up to 1.50 m from the camera.
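A minimal sketch of this classification step follows, under several assumptions that are ours rather than the paper's: dot brightness is compensated for distance with an inverse-square falloff (a plausible but unverified model of the Kinect's IR illumination), a dot pixel counts as display surface only when its compensated peak and gradient both reach hypothetical thresholds defined at 1 m, pixels without an associated dot start as `None`, and rounds of majority voting over the 4-neighbourhood stand in for the iterative filtering.

```python
from typing import Dict, List, Optional, Tuple

Label = Optional[bool]  # True = display surface, False = skin, None = unclassified


def classify_dot(peak: float, gradient: float, depth_m: float,
                 peak_threshold: float, gradient_threshold: float) -> bool:
    """Label one dot pixel; thresholds are defined at a 1 m reference depth (assumption)."""
    # Assumed inverse-square falloff: scale measured values back to the 1 m reference.
    scale = depth_m ** 2
    # Low peak OR low gradient indicates skin, so the surface needs both to be high.
    return peak * scale >= peak_threshold and gradient * scale >= gradient_threshold


def fill_unclassified(labels: List[List[Label]], max_rounds: int = 10) -> List[List[Label]]:
    """Propagate labels into None pixels by majority vote of labelled 4-neighbours."""
    grid = [row[:] for row in labels]
    rows, cols = len(grid), (len(grid[0]) if grid else 0)
    for _ in range(max_rounds):
        updates: Dict[Tuple[int, int], bool] = {}
        for r in range(rows):
            for c in range(cols):
                if grid[r][c] is not None:
                    continue
                votes = [grid[rr][cc]
                         for rr, cc in ((r - 1, c), (r + 1, c), (r, c - 1), (r, c + 1))
                         if 0 <= rr < rows and 0 <= cc < cols and grid[rr][cc] is not None]
                if votes:
                    # Ties go to "skin": dropping a surface pixel is cheap because
                    # the model fit tolerates missing data.
                    updates[(r, c)] = sum(votes) > len(votes) / 2
        if not updates:
            break
        for (r, c), value in updates.items():  # apply all votes of this round at once
            grid[r][c] = value
    return grid
```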

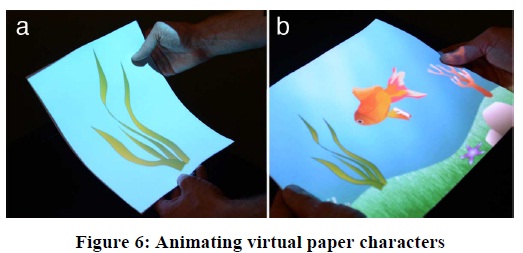

To demonstrate the wide applicability of highly deformable handheld displays, we next present an application for children that uses deformation as a simple and intuitive means of animating paper characters in an interactive picture. By deforming the display and moving it in space (Fig. 1c and 6a), the user animates the creature. High-resolution deformation allows for highly individualized and varied animation patterns. Once animated, the creature is released into the virtual world of the animated picture (Fig. 6b). For example, fish may move at different speeds and move their fins.

A sea star may lift some of its arms. A sea worm may creep along the ground. A flatfish may meander with sophisticated wave-like movements, and seaweed may slowly bend in the water. Such rich deformation capabilities can easily be combined with concepts from previous research on animating paper cut-outs [4] that address skeleton animation, eye movement, and scene lighting. 3D models could also be animated by incorporating as-rigid-as-possible deformation [33]. It is worth noting that our concept can be readily deployed with standard hardware.

A logical extension of Flexpad is touch input, and future work should address several challenges in this regard. Technically, this involves developing solutions that let Kinect sensors reliably detect touch input on surfaces that deform in real time. On a conceptual level, and building on Dijkstra et al.'s recent work [9], it also involves understanding where users hold the display and where they deform it. This understanding is required to identify areas that are reachable for touch input and to inform techniques that differentiate intended touch input from false positives caused by the user touching the display while deforming it.

An informal analysis of our study data showed that users performed single deformations almost exclusively by touching the display close to its edges and on its back side. This also held true for dual deformations with the fully flexible display material. This suggests that touches in the center area of the display can be reliably interpreted as intended touch input. In contrast, participants touched all over the display for dual deformations with the shape-retaining material. A simple spatial differentiation is not sufficient in this case; more advanced techniques need to be developed, for instance based on the shape of the touch point or on the normal force involved.
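The spatial heuristic suggested by this analysis could be prototyped as follows. The sketch assumes touch points are available in normalized sheet coordinates (0 to 1 along each edge), for example from the fitted surface model, and the 15 % edge margin is a hypothetical value, not one derived from the study. For the shape-retaining material under dual deformations it deliberately returns an "unknown" verdict, since the study data indicate that location alone cannot separate intended touches from grip contacts there.

```python
from enum import Enum


class Material(Enum):
    FULLY_FLEXIBLE = "fully flexible"
    SHAPE_RETAINING = "shape retaining"


class TouchVerdict(Enum):
    INTENDED = "intended touch input"
    GRIP = "likely contact from holding or deforming"
    UNKNOWN = "needs a more advanced technique"


EDGE_MARGIN = 0.15  # hypothetical fraction of the sheet treated as the grip zone


def classify_touch(u: float, v: float, material: Material, dual_deformation: bool,
                   on_backside: bool = False) -> TouchVerdict:
    """Spatial heuristic for a touch at normalized sheet coordinates (u, v) in [0, 1]."""
    if material is Material.SHAPE_RETAINING and dual_deformation:
        # Participants touched all over the display here; location alone is not enough.
        return TouchVerdict.UNKNOWN
    if on_backside:
        return TouchVerdict.GRIP
    # Distance to the nearest edge decides between grip zone and central input area.
    near_edge = min(u, 1.0 - u, v, 1.0 - v) < EDGE_MARGIN
    return TouchVerdict.GRIP if near_edge else TouchVerdict.INTENDED
```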

1. Alexander, J., Lucero, A. and Subramanian, S. Tilt displays: designing display surfaces with multi-axis tilting and actuation. In Proc. MobileHCI’12, ACM Press, 2012.

2. Balakrishnan, R., Fitzmaurice, G., Kurtenbach, G. and Singh, K. Exploring interactive curve and surface manipulation using a bend and twist sensitive input strip. In Proc. I3D '99, 1999.

3. Bandyopadhyay, D., Raskar, R. and Fuchs, H. Dynamic shader lamps: Painting on movable objects. In Proc. Intl. Symp. Augmented Reality, 2001.

4. Barnes, C., Jacobs, D., Sanders, J., Goldman, D. B., Rusinkiewicz, S., Finkelstein, A. and Agrawala, M. Video puppetry: a performative interface for cutout animation. In Proc. SIGGRAPH Asia '08, ACM Press, 2008.

5. Benko, H., Jota, R. and Wilson, A. MirageTable: freehand interaction on a projected augmented reality tabletop. In Proc. CHI '12, ACM Press, 2012.
