Published on Nov 30, 2023

The present paper introduces a method to authenticate people from their physiological activity, specifically the combination of ECG and EEG data. We call this system STARFAST (STAR Fast Authentication bio-Scanner Test). Several biometric modalities are already exploited commercially for person authentication: voice recognition, face recognition and fingerprint recognition are among the most common today.

Other types of biometrics are being studied as well: DNA analysis, keystroke dynamics, gait, palm print, ear shape, hand geometry, vein patterns, iris, retina and written signature.

Although these authentication techniques exist today, they present some problems. Typical biometric traits such as fingerprint, voice and retina are not universal and can be subject to physical damage (dry skin, scars, loss of voice...). In fact, it is estimated that 2-3% of the population either lacks the feature required for authentication or provides a biometric sample of poor quality. Furthermore, these systems are subject to attacks such as presenting a registered deceased person or a dismembered body part, or introducing fake biometric samples. The paper also compares the performance of the system with the Active Two system from Biosemi.

The Active Two system is state-of-the-art commercial equipment that has proven to perform very well and has been used in many studies. So far, three demonstration applications have been developed using ENOBIO: an EOG-based Human Computer Interface (HCI), EEG- and ECG-based biometry for authentication (presented as a poster at pHealth), and an EEG-based sleepiness prediction system for drivers. In all cases the applications are designed around the same 4-channel system. ENOBIO has been developed with the help of the SENSATION FP6 IP 507231.

With the evolution of m-Health, an increasing number of biomedical sensors will in future be worn on or implanted in an individual for the monitoring, diagnosis and treatment of diseases. To optimize resources, it is therefore necessary to investigate how to interconnect these sensors in a wireless body area network, where the security of private data transmission is always a major concern. This paper proposes a novel solution to the problem of entity authentication in a body area sensor network (BASN) for m-Health. Physiological signals detected by biomedical sensors serve two functions:

1) for a specific medical application, and

2) for sensors in the same BASN to recognize each other by biometrics.
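As a minimal sketch of the second function, assume each sensor has already detected pulse peaks in its own PPG channel and shares only their timestamps in seconds. Two sensors on the same body should then observe matching beat-to-beat intervals, and a constant pulse-arrival delay between body sites cancels out when consecutive timestamps are subtracted. The function names and the 20 ms tolerance are hypothetical, not taken from the study.

```python
from statistics import mean


def inter_pulse_intervals(peak_times: list[float]) -> list[float]:
    """Beat-to-beat intervals (s) between consecutive detected pulse peaks."""
    return [b - a for a, b in zip(peak_times, peak_times[1:])]


def same_body(peaks_a: list[float], peaks_b: list[float], tol_s: float = 0.02) -> bool:
    """Accept when the two interval sequences agree on average within tol_s.

    Assumes both sensors captured the same beats over the same window, so the
    i-th interval of one sensor corresponds to the i-th interval of the other.
    """
    ipi_a = inter_pulse_intervals(peaks_a)
    ipi_b = inter_pulse_intervals(peaks_b)
    n = min(len(ipi_a), len(ipi_b))
    if n == 0:
        return False  # not enough beats to compare
    return mean(abs(x - y) for x, y in zip(ipi_a[:n], ipi_b[:n])) <= tol_s


finger = [0.00, 0.82, 1.61, 2.45, 3.22]
earlobe = [t + 0.05 for t in finger]    # same beats, constant 50 ms delay
other = [0.00, 0.70, 1.45, 2.10, 2.90]  # an unrelated heartbeat
print(same_body(finger, earlobe))  # True
print(same_body(finger, other))    # False
```

A real scheme would also need to align beats between sensors and cope with missed or spurious peak detections, which this sketch ignores.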

A feasibility study of the proposed entity authentication scheme was carried out on 12 healthy individuals, each with 2 channels of photoplethysmogram (PPG) captured simultaneously at different parts of the body. The beat-to-beat heartbeat interval is used as the biometric characteristic from which the identity of the individual is generated. The results of the statistical analysis suggest that it could serve as a biometric feature for entity authentication in a BASN.

Advantages

Since every living and functional person has a recordable EEG/ECG signal, the EEG/ECG feature is universal.

Moreover, brain or heart damage rarely occurs, so the feature appears to be fairly invariant over time. Finally, it seems very difficult to fake an EEG/ECG signature or to attack an EEG/ECG biometric system. An ideal biometric system should offer 100% reliability, user friendliness, fast operation and low cost. The perfect biometric trait should show very low intra-subject variability, very high inter-subject variability, very high stability over time and universality. In the next section we present the general architecture and the overall performance of the system we have developed.
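The first two trait criteria can be quantified by comparing distances between feature vectors: a good trait gives a small mean distance between samples of the same subject and a large one between samples of different subjects. This sketch assumes each recording has already been reduced to a fixed-length feature vector and uses Euclidean distance; the features, subject IDs and toy data are hypothetical.

```python
from itertools import combinations
from math import dist
from statistics import mean


def variability(samples: dict[str, list[tuple[float, ...]]]) -> tuple[float, float]:
    """Return (mean intra-subject distance, mean inter-subject distance).

    Needs at least two subjects and at least one subject with two samples.
    """
    # Every pair of samples recorded from the same subject.
    intra = [dist(a, b)
             for vectors in samples.values()
             for a, b in combinations(vectors, 2)]
    # Every pair of samples recorded from two different subjects.
    inter = [dist(a, b)
             for v1, v2 in combinations(samples.values(), 2)
             for a in v1 for b in v2]
    return mean(intra), mean(inter)


toy = {
    "subject_1": [(1.0, 2.0), (1.1, 2.0)],
    "subject_2": [(5.0, 1.0), (5.0, 1.2)],
}
intra, inter = variability(toy)
print(f"intra = {intra:.2f}, inter = {inter:.2f}")
```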

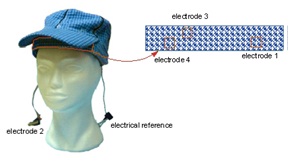

Three different applications have been built on the ENOBIO system. In this section we give a quick overview of them.

An EOG-based Human Computer Interface

Starlab developed a system to track eye movements. When we look at a scene, we focus on objects of interest by shifting our gaze with fast eye movements. The system detects these movements and extracts their amplitude and direction from the EOG signal. The signal is acquired by ENOBIO and sent wirelessly to the ENOBIO server installed on a laptop. From there, the raw signal can be forwarded via TCP/IP to any application, in this case the EOG HCI system.
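The detection step can be sketched with a simple velocity threshold: a saccade is a run of samples in which the combined horizontal/vertical EOG speed exceeds a threshold, its amplitude is the displacement between the first and last sample of the run, and its direction is the angle of that displacement. This is an illustrative technique chosen here, not necessarily the one used in the system described. The channel layout (one horizontal and one vertical derivation, positive vertical meaning upward gaze), the threshold and the function names are assumptions, and amplitudes stay in raw EOG units because no calibration to visual angle is described.

```python
import math


def _summarize(h, v, start, end):
    """Amplitude and direction (degrees, 0 = rightward, 90 = upward) of one event."""
    dh = h[end] - h[start]
    dv = v[end] - v[start]
    return start, end, math.hypot(dh, dv), math.degrees(math.atan2(dv, dh))


def detect_saccades(h, v, fs, vel_thresh):
    """Return (start_idx, end_idx, amplitude, direction_deg) for each saccade.

    h, v: horizontal and vertical EOG samples in the same units
    fs: sampling rate in Hz
    vel_thresh: speed (units per second) above which the eye counts as moving
    """
    events = []
    start = None
    for i in range(1, len(h)):
        speed = math.hypot(h[i] - h[i - 1], v[i] - v[i - 1]) * fs
        if speed > vel_thresh and start is None:
            start = i - 1                  # last sample before the jump
        elif speed <= vel_thresh and start is not None:
            events.append(_summarize(h, v, start, i - 1))
            start = None
    if start is not None:                  # movement still ongoing at the end
        events.append(_summarize(h, v, start, len(h) - 1))
    return events


h = [0, 0, 0, 10, 20, 30, 30, 30]  # a rightward step in the horizontal channel
v = [0] * 8
print(detect_saccades(h, v, fs=100, vel_thresh=500))  # [(2, 5, 30.0, 0.0)]
```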

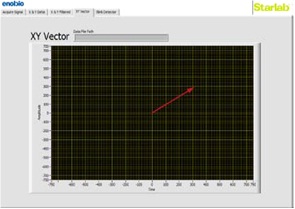

Figure 4: EOG-HMI system. Three electrodes are placed on the forehead; the fourth one and the electrical reference are clipped to the earlobes. Figure 7 shows a graphical representation of the output of a movement.

Figure 5: The vector in the picture represents the eye-gaze movement of a user of the EOG Human Computer Interface. The absolute error in the movement predictions is 5.9% with a standard deviation of 6.6 on the X axis, and 9.9% with a standard deviation of 13.1 on the Y axis.

In the biometric application, the fusion module [22] then provides the final authentication decision. To test the performance of our system we use 48 legal situations (a subject claims to be himself or herself), 350 impostor situations (an enrolled subject claims to be another subject from the database) and 16 intruder situations (a subject who is not enrolled in the system claims to be a subject belonging to the database).
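The three test categories can be made concrete with a small data structure. This sketch simply enumerates every possible claim for a given set of enrolled and non-enrolled subjects; it only illustrates the definitions and does not reproduce the 48/350/16 split above, which depends on how the recordings were collected. The subject IDs and the function name are hypothetical.

```python
from itertools import permutations


def build_trials(enrolled, non_enrolled):
    """Return (presented_subject, claimed_identity, category) tuples."""
    # Legal: an enrolled subject claims his or her own identity.
    legal = [(s, s, "legal") for s in enrolled]
    # Impostor: an enrolled subject claims another enrolled identity.
    impostor = [(a, b, "impostor") for a, b in permutations(enrolled, 2)]
    # Intruder: a non-enrolled subject claims an enrolled identity.
    intruder = [(x, c, "intruder") for x in non_enrolled for c in enrolled]
    return legal + impostor + intruder


trials = build_trials(["s1", "s2", "s3"], ["x1"])
print(len(trials))  # 12: 3 legal + 6 impostor + 3 intruder
```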

Once the EEG and ECG biometric results are fused using a complex decision boundary (dotted line 2 in fig. 3), we obtain ideal performance, that is, a True Acceptance Rate (TAR) of 100% and a False Acceptance Rate (FAR) of 0%. With a linear decision boundary (line 1 in fig. 3), we obtain TAR = 97.9% and FAR = 0.82%. The results are summarized in table I.

Two-dimensional decision space. The ordinate represents the ECG probabilities and the abscissa the EEG probabilities. Red crosses represent impostor/intruder cases and green crosses represent legal cases. Two decision functions are represented.

This system has also been tested to validate the initial state of users and has proved sensitive enough to detect it. If a subject has suffered from sleep deprivation [28], alcohol intake [26, 27] or drug ingestion when taking an authentication test, the authentication performance decreases. This provides evidence that such a system can detect not only the identity of a subject but also his or her state.
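The reported rates can be checked with simple arithmetic. Assuming FAR is computed over all 366 impostor and intruder situations together, TAR = 97.9% and FAR = 0.82% correspond to 47 of 48 legal claims and 3 of 366 attacks being accepted; these counts are inferred from the percentages, not stated in the source. The sketch also shows what a linear decision boundary in the EEG/ECG probability plane looks like; its weights and threshold are hypothetical placeholders, not the fitted line 1.

```python
def linear_accept(p_eeg, p_ecg, w_eeg=1.0, w_ecg=1.0, threshold=1.0):
    """Accept a claim when (p_eeg, p_ecg) lies above the line
    w_eeg * p_eeg + w_ecg * p_ecg = threshold (hypothetical parameters)."""
    return w_eeg * p_eeg + w_ecg * p_ecg > threshold


def tar_far(legal_accepted, attack_accepted):
    """TAR over legal claims, FAR over impostor + intruder claims (lists of bools)."""
    tar = sum(legal_accepted) / len(legal_accepted)
    far = sum(attack_accepted) / len(attack_accepted)
    return tar, far


legal = [True] * 47 + [False]         # 48 legal situations
attacks = [True] * 3 + [False] * 363  # 350 impostor + 16 intruder situations
tar, far = tar_far(legal, attacks)
print(f"TAR = {tar:.1%}, FAR = {far:.2%}")  # TAR = 97.9%, FAR = 0.82%
print(linear_accept(0.9, 0.8), linear_accept(0.2, 0.3))  # True False
```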

These results show that people can be authenticated from physiological data using machine learning techniques. Specifically, they show that processing the physiological modalities separately in two (or more) independent biometric modules, and then applying a fusion stage to the resulting biometric scores, increases the performance of the system. Following a very similar approach, the system could easily be adapted to emotion recognition [29, 30, 34] from physiological data, or to a Brain Computer Interface, simply by starting from different ground-truth data.
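The extensibility argument can be illustrated with score-level fusion in which each modality is an independent module returning a match score for the claimed identity. The sketch fuses with the mean of the scores (the classic sum rule) purely as an example, since the actual fusion module [22] is not described here; the module names, dummy scorers and threshold are hypothetical. Adding a modality only means adding an entry to the dictionary.

```python
from statistics import mean
from typing import Callable, Mapping

# A module maps (raw data for its modality, claimed identity) -> match score in [0, 1].
Scorer = Callable[[object, str], float]


def authenticate(recording: Mapping[str, object], claimed_id: str,
                 modules: Mapping[str, Scorer], threshold: float = 0.5) -> bool:
    """Score each modality separately, then fuse the scores with their mean."""
    scores = [scorer(recording[name], claimed_id) for name, scorer in modules.items()]
    return mean(scores) > threshold


# Dummy scorers standing in for trained EEG and ECG classifiers.
modules = {
    "eeg": lambda data, cid: 0.8,
    "ecg": lambda data, cid: 0.6,
}
recording = {"eeg": [], "ecg": []}
print(authenticate(recording, "subject_1", modules))  # True

# Extending the system: one more module, no change to the fusion code.
modules["ppg"] = lambda data, cid: 0.0
recording["ppg"] = []
print(authenticate(recording, "subject_1", modules))  # False
```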

In our view, this extensibility is the most interesting aspect of our study, along with the ‘personal classifier’ approach, which considerably improves the performance of the system. The system described could have several applications in Virtual Reality. It can continuously verify that the person who is supposed to be telepresent in an audiovisual interactive space is actually that person. This could help personalize the reactions of the virtual environment [31], or secure interactions by guaranteeing the authenticity of the person behind them. Although the system described here was oriented towards person identification, its performance changed significantly when physiological data obtained in altered states, such as those resulting from sleep deprivation, were introduced.

This indicates that such a system could be used to extract dynamically changing states, such as physiological activity related to mood or to intense cognitive activity. For example, it could be used to extract the features described above (sleep deprivation, alcohol intake), but also information about basic emotions (see, for example, [34]). Such dynamically extracted features could be used to evaluate how people respond in virtual environments and to adapt the behavior of those environments accordingly.
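Continuous validation could be sketched as re-running the fused check on every new epoch of data and smoothing the result over a short window, so that a single noisy epoch does not reject the user. A sustained drop would then flag either a different person or, as observed above for sleep deprivation, an altered state of the same person. The class name, window length and threshold are hypothetical.

```python
from collections import deque
from statistics import mean


class ContinuousVerifier:
    """Re-check a claimed identity on every new epoch of physiological data.

    Keeps the fused match scores of the last `window` epochs and reports
    whether their mean stays above `threshold`.
    """

    def __init__(self, window: int = 5, threshold: float = 0.5):
        self.scores = deque(maxlen=window)  # oldest score drops out automatically
        self.threshold = threshold

    def update(self, fused_score: float) -> bool:
        self.scores.append(fused_score)
        return mean(self.scores) > self.threshold


verifier = ContinuousVerifier(window=3, threshold=0.5)
fused_scores = [0.9, 0.8, 0.85, 0.2, 0.1, 0.1]
print([verifier.update(s) for s in fused_scores])
# [True, True, True, True, False, False]
```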
