Published on Nov 30, 2023

This paper describes a methodology for gesture control of a custom-developed mobile robot using body gestures and the Microsoft Kinect sensor. The Kinect sensor's ability to track joint positions is used to develop a software application that recognizes gestures and maps them to control commands. The proposed methodology has been experimentally evaluated. The results of the evaluation, presented in the paper, show that the methodology is accurate and reliable and that it could be used for mobile robot control in practical applications.

With the development of technology, robots are gradually entering our lives. Applications range from rehabilitation, assisted living, education, and housework assistance to warfare. Different applications require specific control strategies and controllers. The availability of a myriad of low-cost sensing devices keeps remote control of robotic devices a topic of interest among researchers. In particular, gesture control of robotic devices with varying complexity and degrees of freedom is still considered a challenging task. In this context, recently developed depth sensors, such as the Kinect sensor, have provided new opportunities for human-robot interaction. Kinect can recognize different gestures performed by a human operator, which simplifies the interaction process. In this way, robots can be controlled easily and naturally. The key enabling technology is the understanding of human body language: the computer must first understand what a user is doing before it can respond. The concept of gesture control for manipulating robots has been used in many research studies. Thanh et al. developed a system in which a human user can interact with the robot using body language. They used a semaphore communication method to command the iRobot to move up or down and to turn left or right.

Waldherr et al. describe a gesture interface for controlling a mobile robot equipped with a manipulator. Their interface uses a camera to track a person and recognize gestures involving arm motion. Luo et al. use the Kinect sensor as a motion capture device to control robot arms directly, using Cartesian impedance control to follow the human motion. Jacob and Wachs use hand orientation and hand gestures to interact with a robot, which uses the recognized pointing direction to define its goal on a map. Kim and Hong have proposed a system intended to support natural interaction with autonomous robots in public places, such as museums and exhibition centers. In this paper, the Kinect sensor is used for real-time mobile robot control. The developed application allows the robot to be controlled with a predefined set of body gestures. The operator, standing in front of the Kinect, performs a particular gesture, which is recognized by the system. The system then sends commands to an Arduino Uno microcontroller, which drives the robot.
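The last step of that pipeline, turning tracked joint positions into a command for the microcontroller, can be sketched as follows. This is only an illustration under stated assumptions: the gesture set (hands raised above the head), the joint names, the 10 cm margin, and the single-byte command codes are all hypothetical, since the text does not specify the actual gestures or the protocol used with the Arduino Uno.

```python
from typing import Dict, Tuple

# A joint position as (x, y, z) in metres, with y pointing up (assumed convention).
Joint = Tuple[float, float, float]

# Hypothetical single-byte commands; the real protocol is not given in the text.
COMMANDS: Dict[str, bytes] = {
    "forward": b"F",
    "left": b"L",
    "right": b"R",
    "stop": b"S",
}


def classify_gesture(joints: Dict[str, Joint], margin: float = 0.10) -> str:
    """Classify a pose by checking which hands are raised above the head.

    A hand counts as raised only if it is at least `margin` metres above
    the head joint, which filters out small tracking jitter.
    """
    head_y = joints["head"][1]
    left_up = joints["hand_left"][1] > head_y + margin
    right_up = joints["hand_right"][1] > head_y + margin

    if left_up and right_up:
        return "forward"
    if left_up:
        return "left"
    if right_up:
        return "right"
    return "stop"  # no recognized gesture: stopping is the safe default


def command_for(joints: Dict[str, Joint]) -> bytes:
    """Map the recognized gesture to the byte that would be sent to the robot."""
    return COMMANDS[classify_gesture(joints)]
```

In a complete system the returned byte would be written to the serial link connected to the microcontroller; that part is omitted here because the text does not describe the connection.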

1. A power wheelchair control system should be developed to improve the quality of life of disabled elderly people.

2. The movement of the wheelchair should be controlled completely by hand gestures.

3. The power wheelchair should be able to move to and from the location automatically.

4. A touch panel should be developed to control and move the power wheelchair.

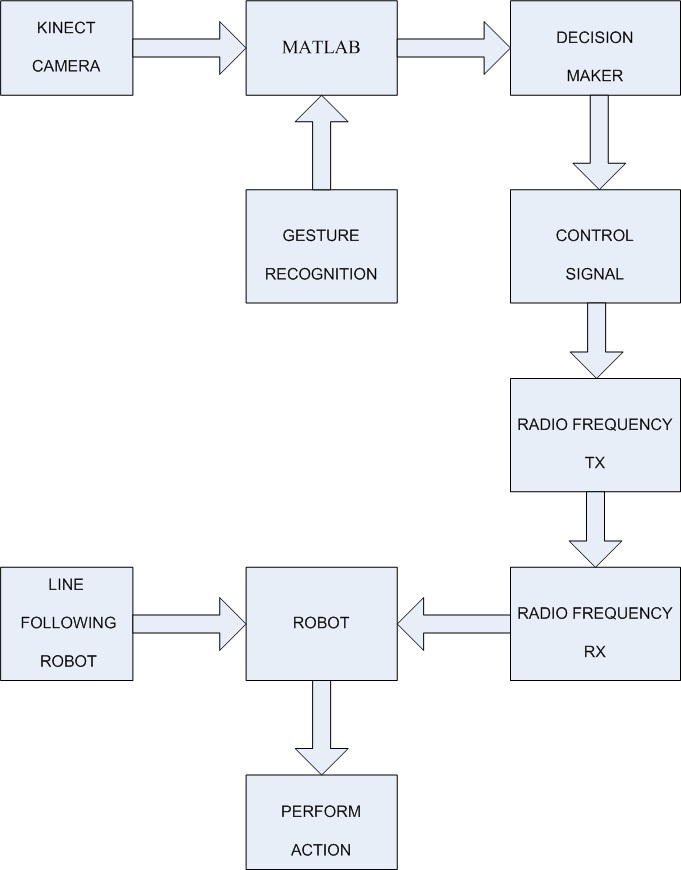

The system is divided into three parts: gesture recognition, Tx-Rx operation, and wheelchair control. The main components of the system are the power wheelchair prototype, a mobile application, the Kinect, a PC server, IR sensors, and a ZigBee interface. Both the touch panel and the ZigBee interface are installed in the wheelchair. The PC server with the Kinect interface handles gesture recognition, identifies the location of the disabled elderly person, and plans the path from the power wheelchair to that person. Once the PC server detects a request, it decides the wheelchair's path based on the algorithms; the infrared sensors are then activated in accordance with the planned route, and the wheelchair moves towards the person. The wheelchair is built on line-following robot technology: it follows the line and parks near the patient.
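The line-following behaviour can be illustrated with a minimal sketch. It assumes the classic arrangement of two IR sensors straddling the line, each reporting whether it currently sees the line, and a differential drive with independent left and right wheel speeds. The sensor layout, the function name `line_follow_step`, and the use of "both sensors on the line" as a parking marker are assumptions for illustration; the text does not describe the actual sensor arrangement or control law.

```python
from typing import Tuple


def line_follow_step(left_on_line: bool, right_on_line: bool,
                     speed: float = 0.5) -> Tuple[float, float]:
    """Return (left_wheel_speed, right_wheel_speed) for one control step.

    The two sensors sit on either side of the line, so in normal
    operation neither of them sees it.
    """
    if left_on_line and right_on_line:
        # Both sensors see the line: treated here as a stop/parking marker.
        return (0.0, 0.0)
    if left_on_line:
        # The line has drifted under the left sensor: steer left by
        # stopping the left wheel and driving the right one.
        return (0.0, speed)
    if right_on_line:
        # The line has drifted under the right sensor: steer right.
        return (speed, 0.0)
    # The line is between the sensors: drive straight ahead.
    return (speed, speed)
```

Calling this function once per sensor reading keeps the line centred between the two sensors until the parking marker is reached.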

The patient then uses a smartphone application to navigate the wheelchair to the desired location. Afterwards, the patient should make sure to leave the wheelchair on the line so that it can return to its parking location, which is triggered by a specific hand gesture sensed by the Kinect camera.

The Kinect sensor was introduced to the market in 2010 by Microsoft as a line of motion-sensing input devices intended for use with the Xbox 360 console. It is a peripheral input device composed of several sensors: a depth sensor, an RGB camera, and a four-microphone array. The core component of Kinect is the range camera (originally developed by PrimeSense), which uses an infrared projector, an infrared camera, and a special microchip to track objects in 3D. The sensor thus provides full-body 3D motion capture, facial recognition, and voice recognition capabilities. The internal structure (sensor components) and the architecture of Kinect for Windows are presented in Figure 2.

Its image and depth capturing and audio recording capabilities, together with its low cost, make Kinect a very popular input device for various applications. It allows users to interact naturally with the computer and to control applications or games using only their bodies. This is enabled by identifying the position and orientation of 25 individual joints (including thumbs) and tracking their motion. The angular field of view of the Kinect sensor is 57° in the horizontal direction and 43° in the vertical direction. It is also equipped with a motorized pivot that can tilt the sensor by an additional 27° either up or down. The sensor can maintain tracking over an extended range of about 0.7–6 m. It can track up to 6 human body skeletons in the working area.
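The field-of-view and range figures above determine how much space the operator has for performing gestures. The following sketch works this out using only the numbers quoted in the text (57° by 43°, 0.7 to 6 m). The coordinate convention (x to the right, y up, z pointing away from the sensor) and the treatment of the range limits as limits on the depth z are simplifying assumptions, and the motorized tilt is ignored.

```python
import math
from typing import Tuple

H_FOV_DEG = 57.0    # horizontal field of view quoted in the text
V_FOV_DEG = 43.0    # vertical field of view quoted in the text
MIN_RANGE_M = 0.7   # nearest tracking distance quoted in the text
MAX_RANGE_M = 6.0   # farthest tracking distance quoted in the text


def coverage_at(distance_m: float) -> Tuple[float, float]:
    """Width and height (in metres) of the visible area at a given distance."""
    width = 2 * distance_m * math.tan(math.radians(H_FOV_DEG / 2))
    height = 2 * distance_m * math.tan(math.radians(V_FOV_DEG / 2))
    return width, height


def in_view(x: float, y: float, z: float) -> bool:
    """Check whether a point in sensor coordinates is inside the viewing volume."""
    if not (MIN_RANGE_M <= z <= MAX_RANGE_M):
        return False
    h_angle = math.degrees(math.atan2(abs(x), z))
    v_angle = math.degrees(math.atan2(abs(y), z))
    return h_angle <= H_FOV_DEG / 2 and v_angle <= V_FOV_DEG / 2
```

Under these assumptions, an operator standing 2 m from the sensor has a visible area of roughly 2.2 m by 1.6 m, so gestures with fully outstretched arms fit only if the operator stands near the centre of the view.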

With the elderly population growing, an aging society is an inevitable trend in the near future. Improving the quality of life of disabled elderly people therefore becomes a crucial issue. The main problem is that a disabled person or patient may be unable to make the mechanical movements needed to operate a wheelchair. The idea of the system is to let such a person operate the wheelchair through gestures alone, which reduces their physical burden. The power wheelchair is particularly useful for moving aged and disabled people around the home using only hand gestures, without the help or assistance of others.

1. The wheelchair could move towards the disabled person without following a line.

2. The wheelchair's movements could be controlled by speech recognition.

3. The range within which gestures can be shown to the Kinect camera could be increased.

4. Several aspects need to be improved: the size of the wheelchair, the cost of the wheelchair, and the cost of the Kinect camera.

The proposed system could be applied to the control of robotic wheelchairs intended for disabled persons with functional upper limbs. Moreover, it could be applied to the control of industrial or medical processes in which the user cannot interact directly with the equipment or apparatus. From the user's perspective, the system could support more than one user, because the Kinect sensor and the developed control application can track multiple skeletons at the same time.
