Published on Nov 30, 2023

The Data Leakage Detection project proposes data allocation strategies that improve the probability of identifying leakages. In some cases, we can also inject “realistic but fake” data records to further improve our chances of detecting leakage and identifying the guilty party.

In the course of doing business, sensitive data must sometimes be handed over to supposedly trusted third parties. For example, an enterprise may outsource its data processing, so data must be given to various other companies.

There always remains a risk that the data will be leaked by an agent.

Traditionally, leakage detection is handled by watermarking, e.g., a unique code is embedded in each distributed copy. If that copy is later discovered in the hands of an unauthorized party, the leaker can be identified. Watermarks can be very useful in some cases, but they involve some modification of the original data. Furthermore, watermarks can sometimes be destroyed if the data recipient is malicious.

In this paper, we study unobtrusive techniques for detecting leakage of a set of objects or records. Specifically, we study the following scenario: After giving a set of objects to agents, the distributor discovers some of those same objects in an unauthorized place. (For example, the data may be found on a website, or may be obtained through a legal discovery process.)

At this point, the distributor can assess the likelihood that the leaked data came from one or more agents, as opposed to having been independently gathered by other means. Using an analogy with cookies stolen from a cookie jar, if we catch Freddie with a single cookie, he can argue that a friend gave him the cookie. But if we catch Freddie with five cookies, it will be much harder for him to argue that his hands were not in the cookie jar. If the distributor sees “enough evidence” that an agent leaked data, he may stop doing business with him, or may initiate legal proceedings. In this paper, we develop a model for assessing the “guilt” of agents.
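The guilt assessment described above can be sketched in code. This is a minimal illustration under stated assumptions, not the project's actual model: it assumes that each leaked object was either obtained independently by the unauthorized party with probability `p`, or leaked by exactly one of the agents holding it (each equally likely), and that objects are independent of one another. The class name `GuiltEstimator`, the parameter `p`, and the map layout are hypothetical.

```java
import java.util.Arrays;
import java.util.Collections;
import java.util.HashMap;
import java.util.HashSet;
import java.util.Map;
import java.util.Set;

class GuiltEstimator {

    /**
     * Estimates the probability that `agent` leaked at least one leaked object.
     * Assumption (not from the original text): a leaked object was guessed
     * independently with probability p; otherwise one of the agents holding
     * it, chosen uniformly at random, leaked it.
     */
    static double guiltProbability(String agent,
                                   Set<String> leaked,
                                   Map<String, Set<String>> allocations,
                                   double p) {
        Set<String> held = allocations.getOrDefault(agent, Collections.<String>emptySet());
        double probInnocent = 1.0;
        for (String obj : leaked) {
            if (!held.contains(obj)) {
                continue; // the agent never received this object
            }
            // Count how many agents received this object.
            int holders = 0;
            for (Set<String> objs : allocations.values()) {
                if (objs.contains(obj)) {
                    holders++;
                }
            }
            // Chance that this particular agent is the source of this object.
            double leakedByAgent = (1.0 - p) / holders;
            probInnocent *= (1.0 - leakedByAgent);
        }
        return 1.0 - probInnocent;
    }

    public static void main(String[] args) {
        Map<String, Set<String>> allocations = new HashMap<>();
        allocations.put("A1", new HashSet<>(Arrays.asList("t1", "t2")));
        allocations.put("A2", new HashSet<>(Arrays.asList("t2")));
        Set<String> leaked = new HashSet<>(Arrays.asList("t1", "t2"));
        for (String agent : allocations.keySet()) {
            System.out.println(agent + " -> "
                    + guiltProbability(agent, leaked, allocations, 0.2));
        }
    }
}
```

In this example, agent A1 is the only holder of `t1`, so it receives a higher score than A2, in the same way that five cookies are harder to explain away than one.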

We also present algorithms for distributing objects to agents, in a way that improves our chances of identifying a leaker. Finally, we also consider the option of adding “fake” objects to the distributed set. Such objects do not correspond to real entities but appear realistic to the agents. In a sense, the fake objects act as a type of watermark for the entire set, without modifying any individual members. If it turns out that an agent was given one or more fake objects that were leaked, then the distributor can be more confident that agent was guilty.

• Our goal is to detect when the distributor’s sensitive data has been leaked by agents, and if possible to identify the agent that leaked the data.

• Perturbation is a very useful technique where the data is modified and made “less sensitive” before being handed to agents. We develop unobtrusive techniques for detecting leakage of a set of objects or records.

• We also present algorithms for distributing objects to agents, in a way that improves our chances of identifying a leaker.

• Finally, we also consider the option of adding “fake” objects to the distributed set. Such objects do not correspond to real entities but appear realistic to the agents.

• In a sense, the fake objects act as a type of watermark for the entire set, without modifying any individual members. If it turns out that an agent was given one or more fake objects that were leaked, then the distributor can be more confident that the agent was guilty.

• If the distributor sees “enough evidence” that an agent leaked data, he may stop doing business with him, or may initiate legal proceedings.

• In this project we develop a model for assessing the “guilt” of agents.

NBLC [12] is a routing metric designed for multichannel multiradio multirate WMNs. The NBLC metric is an estimate of the residual bandwidth of the path, taking into account the radio link quality (in terms of data rate and packet loss rate), interference among links, path length, and traffic load on links. The main idea of the NBLC metric is to increase the system throughput by evenly distributing traffic load among channels and among nodes. To achieve the goal of load balancing, nodes have to know the current traffic load on each channel. Thus, each node has to periodically measure the percentage of busy air time perceived on each radio (tuned to a certain channel) and then obtain the percentage of free-to-use (residual) air time on each radio.

The minimum hardware and software required for the development of the project are:

System : Pentium IV 2.4 GHz

Hard Disk : 40 GB

Floppy Drive : 1.44 MB

Monitor : 15-inch VGA colour

RAM : 256 MB

Language : JAVA, JavaScript.

Front End : JSP, Servlet.

Back End : MySQL.

Web server : Apache Tomcat 7.0.

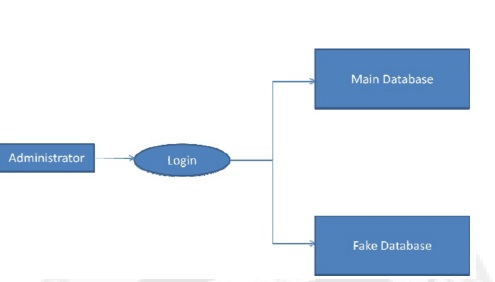

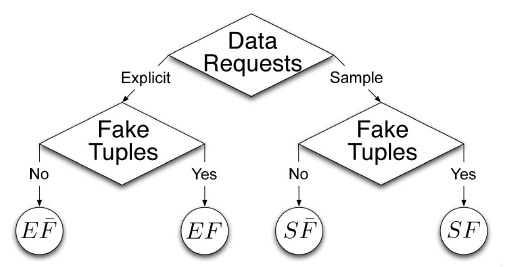

The main focus of our project is the data allocation problem: how can the distributor “intelligently” give data to agents in order to improve the chances of detecting a guilty agent? There are four instances of this problem we address, depending on the type of data requests made by agents and whether “fake objects” are allowed. The two types of requests we handle are sample and explicit: a sample request asks for a given number of objects, while an explicit request asks for all available objects that satisfy a condition. Fake objects are objects generated by the distributor that are not in the set T of real objects. They are designed to look like real objects and are distributed to agents together with the T objects, in order to increase the chances of detecting agents that leak data.
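To make the allocation problem concrete, here is a minimal sketch for sample requests. The text does not spell out the allocation algorithm, so the greedy rule used here (always hand out the objects that have been shared with the fewest agents so far) is an assumption chosen for illustration. The class name `SampleAllocator` and its method are hypothetical, and the object list is assumed to contain no duplicates.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Comparator;
import java.util.HashMap;
import java.util.LinkedHashMap;
import java.util.LinkedHashSet;
import java.util.List;
import java.util.Map;
import java.util.Set;

class SampleAllocator {

    /**
     * Serves sample requests, where each agent asks for a number of objects.
     * Heuristic (an assumption, not the project's algorithm): prefer the
     * objects that have been given to the fewest agents so far, so that
     * agents end up sharing as few objects as possible.
     */
    static Map<String, Set<String>> allocate(List<String> objects,
                                             Map<String, Integer> requests) {
        Map<String, Integer> timesGiven = new HashMap<>();
        for (String obj : objects) {
            timesGiven.put(obj, 0);
        }
        Map<String, Set<String>> allocation = new LinkedHashMap<>();
        for (Map.Entry<String, Integer> request : requests.entrySet()) {
            // Sort a copy so the least-shared objects come first.
            // List.sort is stable, so ties keep their original order.
            List<String> candidates = new ArrayList<>(objects);
            candidates.sort(Comparator.comparingInt(timesGiven::get));
            int count = Math.min(request.getValue(), candidates.size());
            Set<String> given = new LinkedHashSet<>(candidates.subList(0, count));
            for (String obj : given) {
                timesGiven.merge(obj, 1, Integer::sum);
            }
            allocation.put(request.getKey(), given);
        }
        return allocation;
    }

    public static void main(String[] args) {
        Map<String, Integer> requests = new LinkedHashMap<>();
        requests.put("A1", 2);
        requests.put("A2", 2);
        System.out.println(allocate(Arrays.asList("t1", "t2", "t3", "t4"), requests));
    }
}
```

When the total number of requested objects does not exceed the number of available objects, this rule gives every agent a disjoint subset. Once requests exceed that, overlap is unavoidable and the rule only spreads it evenly.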

Fake objects are objects generated by the distributor in order to increase the chances of detecting agents that leak data. The distributor may be able to add fake objects to the distributed data in order to improve his effectiveness in detecting guilty agents. Our use of fake objects is inspired by the use of “trace” records in mailing lists. However, fake objects may impact the correctness of what agents do, so they may not always be allowable.

The idea of perturbing data to detect leakage is not new. However, in most cases, individual objects are perturbed, e.g., by adding random noise to sensitive salaries, or adding a watermark to an image. In our case, we are perturbing the set of distributor objects by adding fake elements. In some applications, fake objects may cause fewer problems than perturbing real objects.

For example, say that the distributed data objects are medical records and the agents are hospitals. In this case, even small modifications to the records of actual patients may be undesirable. However, the addition of some fake medical records may be acceptable, since no patient matches these records, and hence, no one will ever be treated based on fake records.
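The fake-object idea can be sketched as a small registry that remembers which agent received which fake record, so that a fake record found in leaked data points back to its recipient. This is only an illustration: the class `FakeObjectRegistry`, its methods, and the `rec-` identifier format are hypothetical, and a real system would have to generate records that look realistic to agents.

```java
import java.util.Arrays;
import java.util.HashMap;
import java.util.HashSet;
import java.util.LinkedHashSet;
import java.util.Map;
import java.util.Set;
import java.util.UUID;

class FakeObjectRegistry {

    // Maps each fake record to the single agent that received it.
    private final Map<String, String> fakeToAgent = new HashMap<>();

    /** Creates a fake record for one agent and remembers who received it. */
    String createFakeFor(String agent) {
        // Placeholder format (an assumption); a real generator would produce
        // a record that is indistinguishable from genuine ones.
        String fake = "rec-" + UUID.randomUUID();
        fakeToAgent.put(fake, agent);
        return fake;
    }

    /** Returns the agents whose fake records appear in the leaked set. */
    Set<String> agentsImplicatedBy(Set<String> leaked) {
        Set<String> agents = new LinkedHashSet<>();
        for (String obj : leaked) {
            String agent = fakeToAgent.get(obj);
            if (agent != null) {
                agents.add(agent);
            }
        }
        return agents;
    }

    public static void main(String[] args) {
        FakeObjectRegistry registry = new FakeObjectRegistry();
        registry.createFakeFor("hospitalA");
        String fakeForB = registry.createFakeFor("hospitalB");
        Set<String> leaked = new HashSet<>(Arrays.asList("real-record-17", fakeForB));
        System.out.println(registry.agentsImplicatedBy(leaked));
    }
}
```

Because each fake record is given to exactly one agent in this sketch, finding it in leaked data is strong evidence against that agent, while real records stay unmodified.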

In the Optimization Module, the distributor’s data allocation to agents has one constraint and one objective. The distributor’s constraint is to satisfy agents’ requests, by providing them with the number of objects they request or with all available objects that satisfy their conditions. His objective is to be able to detect an agent who leaks any portion of his data.

We consider the constraint as strict. The distributor may not deny serving an agent request and may not provide agents with different perturbed versions of the same objects. We consider fake object distribution as the only possible constraint relaxation. Our detection objective is ideal and intractable. Detection would be assured only if the distributor gave no data object to any agent. We use instead the following objective: maximize the chances of detecting a guilty agent that leaks all his data objects.
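One way to turn this objective into something measurable is to score how much the agents' allocations overlap: if an agent leaks all of his objects, he is easier to single out when few other agents hold the same objects. The sketch below computes such a score. Using overlap as a proxy for detection chances is an assumption made for illustration, and the class name `OverlapScore` is hypothetical.

```java
import java.util.Arrays;
import java.util.HashSet;
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.Set;

class OverlapScore {

    /**
     * For every agent i, counts the objects it shares with each other agent,
     * divides by the size of agent i's own allocation, and sums the results.
     * Lower scores mean the allocations are easier to tell apart.
     * This proxy is an assumption, not a formula given in the text.
     */
    static double sumOverlap(Map<String, Set<String>> allocation) {
        double total = 0.0;
        for (Map.Entry<String, Set<String>> a : allocation.entrySet()) {
            Set<String> own = a.getValue();
            if (own.isEmpty()) {
                continue; // an agent with no data contributes nothing
            }
            int shared = 0;
            for (Map.Entry<String, Set<String>> b : allocation.entrySet()) {
                if (a.getKey().equals(b.getKey())) {
                    continue; // do not compare an agent with itself
                }
                for (String obj : own) {
                    if (b.getValue().contains(obj)) {
                        shared++;
                    }
                }
            }
            total += (double) shared / own.size();
        }
        return total;
    }

    public static void main(String[] args) {
        Map<String, Set<String>> allocation = new LinkedHashMap<>();
        allocation.put("A1", new HashSet<>(Arrays.asList("t1", "t2")));
        allocation.put("A2", new HashSet<>(Arrays.asList("t2", "t3")));
        System.out.println(sumOverlap(allocation));
    }
}
```

A distributor could compare candidate allocations that all satisfy the agents' requests and prefer the one with the lowest score.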

In our Data Leakage Detection project, we have presented a resilient cryptography technique for relational data that embeds cryptography bits in the data statistics. The cryptography problem was formulated as a constrained optimization problem that maximizes or minimizes a hiding function based on the bit to be embedded. GA and PS techniques were employed to solve the proposed optimization problem and to handle the constraints. Furthermore, we presented a data partitioning technique that does not depend on special marker tuples to locate the partitions and proved its resilience to cryptography synchronization errors. We developed an efficient threshold-based technique for cryptography detection that is based on an optimal threshold that minimizes the probability of decoding error.

The cryptography resilience was improved by repeatedly embedding the cryptography bits and using a majority voting technique in the decoding phase. Moreover, the resilience was improved by using multiple attributes. A proof-of-concept implementation of our cryptography technique was used to conduct experiments using both synthetic and real-world data. A comparison of our cryptography technique with previously proposed techniques shows the superiority of our technique against deletion, alteration, and insertion attacks.