Published on Apr 02, 2024

Unmanned Aerial Vehicles (UAVs) are expected to serve as aerial robotic vehicles that perform tasks on their own. Computer vision is applied in UAVs to improve their autonomy in both flight control and perception of the surrounding environment. A survey of research in this field is presented.

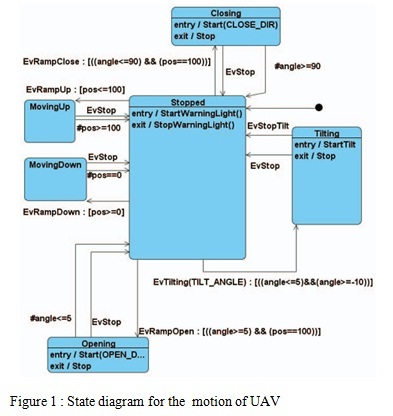

Based on images and videos captured by on-board camera(s), vision methods such as stereo vision and optical flow extract useful features that can be integrated with the flight control system to form a visual servoing loop. Aiming at the use of hand gestures for human-computer interaction, this paper presents a novel approach to hand gesture-based control of UAVs. The research focused mainly on solving some of the most important problems that current HRI (Human-Robot Interaction) systems struggle with.
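To make the idea of visual servoing concrete, here is a minimal sketch of the textbook image-based visual servoing (IBVS) law for point features, v = -gain · L⁺ · e, where e is the image-feature error and L the stacked interaction matrix. It assumes normalized image coordinates, known (or separately estimated) feature depths, and a hand-picked gain; the function names are hypothetical and this is not the controller of any specific system surveyed here.

```python
import numpy as np


def interaction_matrix(x: float, y: float, z: float) -> np.ndarray:
    """2x6 image Jacobian of a normalized image point (x, y) at depth z.

    Maps the camera velocity (vx, vy, vz, wx, wy, wz) to the point's image velocity.
    """
    return np.array([
        [-1.0 / z, 0.0, x / z, x * y, -(1.0 + x * x), y],
        [0.0, -1.0 / z, y / z, 1.0 + y * y, -x * y, -x],
    ])


def ibvs_velocity(features, desired, depths, gain=0.5):
    """Classic IBVS law: camera velocity v = -gain * pinv(L) @ e."""
    features = np.asarray(features, dtype=float)
    desired = np.asarray(desired, dtype=float)
    # Stack one 2x6 block per tracked point into a (2N x 6) matrix.
    L = np.vstack([interaction_matrix(x, y, z)
                   for (x, y), z in zip(features, depths)])
    error = (features - desired).reshape(-1)
    return -gain * np.linalg.pinv(L) @ error
```

The returned six-component camera velocity would still have to be transformed into the vehicle's body frame and handed to the flight controller; that mapping depends on how the camera is mounted.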

Using simple image processing techniques and web cameras, the hand gesture recognition problem is addressed with motion detection and a histogram-based algorithm, which makes the approach efficient in unconstrained environments, easy to implement, and fast enough for practical use. Highly flexible manufacturing (HFM) is a methodology that integrates vision with flexible robotic grasping.
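The motion-detection-plus-histogram idea can be sketched in a few lines of NumPy. This is a simplified illustration, not the paper's algorithm: it assumes grayscale frames from a fixed webcam, detects motion by plain frame differencing, locates the moving hand from the row and column projection histograms of the motion mask, and maps the dominant swipe direction to UAV commands. The `COMMANDS` table and its entries are hypothetical.

```python
import numpy as np


def motion_mask(prev: np.ndarray, curr: np.ndarray, threshold: int = 30) -> np.ndarray:
    """Binary mask of pixels whose grayscale value changed by more than threshold."""
    diff = np.abs(curr.astype(np.int16) - prev.astype(np.int16))
    return diff > threshold


def histogram_centroid(mask: np.ndarray):
    """Centroid of moving pixels computed from projection histograms."""
    col_hist = mask.sum(axis=0)  # moving pixels per column
    row_hist = mask.sum(axis=1)  # moving pixels per row
    total = col_hist.sum()
    if total == 0:
        return None
    cx = float(np.dot(np.arange(mask.shape[1]), col_hist) / total)
    cy = float(np.dot(np.arange(mask.shape[0]), row_hist) / total)
    return cx, cy


# Hypothetical mapping from swipe direction to a UAV command.
COMMANDS = {"left": "roll_left", "right": "roll_right",
            "up": "climb", "down": "descend"}


def classify_swipe(frames, min_shift: float = 5.0):
    """Classify a swipe gesture from a sequence of grayscale frames."""
    centroids = []
    for prev, curr in zip(frames, frames[1:]):
        c = histogram_centroid(motion_mask(prev, curr))
        if c is not None:
            centroids.append(c)
    if len(centroids) < 2:
        return None
    dx = centroids[-1][0] - centroids[0][0]
    dy = centroids[-1][1] - centroids[0][1]
    if max(abs(dx), abs(dy)) < min_shift:
        return None  # too little motion to count as a gesture
    if abs(dx) >= abs(dy):
        return "right" if dx > 0 else "left"
    # Image rows grow downward, so a positive dy means the hand moved down.
    return "down" if dy > 0 else "up"
```

Frame differencing and projection histograms need no training data, which is consistent with the text's emphasis on simplicity and speed; a real system would also need to reject motion that is not the operator's hand.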

The proposed set of grasping shapes is based on the capabilities and mechanical constraints of the robotic hand. Pre-grasp shapes for a Barrett Hand are studied and defined in terms of finger spread and flexion. In addition, a simple and efficient vision algorithm is used to servo the robot and to select the pre-grasp shape in a pick-and-place task involving 9 different vehicle handle parts. Finally, experimental results evaluate the ability of the robotic hand to grasp both pliable and rigid parts and to successfully control the UAV.
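One way to picture pre-grasp shapes "defined using finger spread and flexion" is as a small library of (spread, flexion) postures, with the vision system picking the one whose prototype part best matches the measured part. The sketch below assumes that nearest-prototype selection rule; the shape names, angles, prototype features, and normalization constants are all hypothetical, not the values used in the study.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class PreGraspShape:
    """A pre-grasp posture defined by finger spread and flexion (degrees)."""
    name: str
    spread_deg: float
    flexion_deg: float
    # Prototype part features this shape suits: (width_mm, elongation).
    prototype: tuple


# Hypothetical library: names, angles and prototypes are illustrative only.
SHAPES = [
    PreGraspShape("wrap", spread_deg=0.0, flexion_deg=40.0, prototype=(30.0, 5.0)),
    PreGraspShape("spherical", spread_deg=60.0, flexion_deg=30.0, prototype=(60.0, 1.2)),
    PreGraspShape("fingertip", spread_deg=90.0, flexion_deg=60.0, prototype=(15.0, 1.5)),
]


def select_shape(width_mm: float, length_mm: float) -> PreGraspShape:
    """Pick the shape whose prototype is closest to the part measured by vision."""
    elongation = length_mm / width_mm

    def distance(shape: PreGraspShape) -> float:
        w, e = shape.prototype
        # Hypothetical scales so width (mm) and elongation (ratio) are comparable.
        return math.hypot((width_mm - w) / 50.0, (elongation - e) / 2.0)

    return min(SHAPES, key=distance)
```

Usage: `select_shape(28, 150)` returns the `"wrap"` entry for a long, thin handle, whose spread and flexion would then be sent to the hand before the approach.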

GPS and inertial sensors are typically combined to estimate a UAV's state and form a navigation solution. However, in some circumstances, such as urban or low-altitude areas, the GPS signal may be very weak or even lost. In these situations, visual data can be used as a complement to or substitute for GPS measurements when formulating a navigation solution. This section describes vision-based UAV navigation.

A. Autonomous Landing

Autonomous landing is a crucial capability and requirement for autonomous UAV navigation, and it provides the basic ideas and methods for UAV autonomy. UAVs are generally classified into Vertical Take-Off and Landing (VTOL) UAVs and fixed-wing UAVs. For vision-based landing of VTOL UAVs, Sharp et al. designed a landing target with a simple pattern on which corner points can be easily detected and tracked. By tracking these corners, the UAV can determine its position relative to the landing target using computer vision. Details are described as follows. Given the corner points, estimating the UAV state is an optimization problem. The equation relating a point in the landing pad coordinate frame to the image of that point in the camera frame is given by the perspective projection

$$\lambda\,\mathbf{x} = K\,(R\,\mathbf{p} + T)$$

where $\mathbf{p}$ is the point in the landing pad frame, $R$ and $T$ are the rotation and translation from the pad frame to the camera frame, $K$ is the camera intrinsic matrix, $\mathbf{x}$ is the homogeneous image point, and $\lambda$ is the point's depth.

Figure: Geometry of the coordinate frames and Euclidean motions involved in the vision-based state estimation problem.

Autonomous landing of a fixed-wing UAV is similar to that of a VTOL UAV in theory but more complicated in practice.
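Because the landing pad is planar, a common linear way to solve this estimation problem is to fit a homography between pad corners and their image positions and then decompose it into rotation and translation. This is a swapped-in standard technique (direct linear transform plus the planar decomposition used in camera calibration), not necessarily Sharp et al.'s exact algorithm; in practice its result would seed a nonlinear refinement of reprojection error. The sketch assumes known intrinsics `K`, the pinhole relation λx = K(Rp + T), at least four non-collinear corners with known pad coordinates, and the pad lying in its own Z = 0 plane; function names are hypothetical.

```python
import numpy as np


def homography_dlt(pad_xy, img_uv):
    """Direct linear transform: 3x3 H mapping pad-plane points (X, Y) to pixels (u, v).

    Needs at least four correspondences, no three of them collinear.
    """
    rows = []
    for (X, Y), (u, v) in zip(pad_xy, img_uv):
        rows.append([X, Y, 1.0, 0.0, 0.0, 0.0, -u * X, -u * Y, -u])
        rows.append([0.0, 0.0, 0.0, X, Y, 1.0, -v * X, -v * Y, -v])
    # h is the right singular vector belonging to the smallest singular value.
    _, _, vt = np.linalg.svd(np.asarray(rows, dtype=float))
    return vt[-1].reshape(3, 3)


def pose_from_homography(H, K):
    """Recover R, T for pad points lying in the pad frame's Z = 0 plane.

    For such points K^-1 H is proportional to [r1 r2 T].
    """
    M = np.linalg.inv(K) @ H
    scale = 2.0 / (np.linalg.norm(M[:, 0]) + np.linalg.norm(M[:, 1]))
    if M[2, 2] < 0:  # choose the sign that puts the pad in front of the camera
        scale = -scale
    r1, r2, T = scale * M[:, 0], scale * M[:, 1], scale * M[:, 2]
    R_approx = np.column_stack([r1, r2, np.cross(r1, r2)])
    # Snap to the nearest rotation matrix to absorb measurement noise.
    U, _, Vt = np.linalg.svd(R_approx)
    D = np.diag([1.0, 1.0, np.sign(np.linalg.det(U @ Vt))])
    R = U @ D @ Vt
    return R, T
```

Given `R` and `T`, the camera's position expressed in the pad frame is `-R.T @ T`; with a rigidly mounted camera, the UAV's position relative to the pad follows by a fixed offset.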

The vision subsystem should recognize the runway and keep tracking it during landing. A Kalman filter is introduced to keep the tracking stable. The horizon also needs to be detected. From the runway and the horizon, the vision subsystem can estimate the UAV's state, i.e., location (x, y, z), attitude (pitch, roll, yaw), etc.
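A minimal constant-velocity Kalman filter for tracking a runway feature in the image might look like the sketch below. It assumes the runway detector returns one image point per frame (for example, a point on the runway centerline), a fixed frame interval `dt`, and hand-tuned noise variances; the class name and all parameter values are hypothetical.

```python
import numpy as np


class ConstantVelocityKF:
    """Kalman filter for a 2-D image point with state [u, v, du, dv]."""

    def __init__(self, dt=1.0 / 30.0, process_var=1.0, meas_var=4.0):
        self.x = np.zeros(4)
        self.P = np.eye(4) * 1e3           # large initial uncertainty
        self.F = np.eye(4)
        self.F[0, 2] = self.F[1, 3] = dt   # position += velocity * dt
        self.H = np.zeros((2, 4))
        self.H[0, 0] = self.H[1, 1] = 1.0  # only the position is measured
        self.Q = np.eye(4) * process_var   # simple isotropic process noise
        self.R = np.eye(2) * meas_var      # detector noise in pixels^2

    def predict(self):
        self.x = self.F @ self.x
        self.P = self.F @ self.P @ self.F.T + self.Q
        return self.x[:2]

    def update(self, z):
        y = np.asarray(z, dtype=float) - self.H @ self.x  # innovation
        S = self.H @ self.P @ self.H.T + self.R
        K = self.P @ self.H.T @ np.linalg.inv(S)          # Kalman gain
        self.x = self.x + K @ y
        self.P = (np.eye(4) - K @ self.H) @ self.P
        return self.x[:2]
```

Usage: call `predict()` every frame and `update(z)` only when the detector finds the runway; on frames where detection fails, the prediction alone coasts the track, which is one way the filter keeps tracking stable.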

The deployment of UAVs has been tested in overseas conflicts, where one of their biggest limitations was found to be their limited range. To extend their range, UAVs are expected to be capable of Autonomous Aerial Refuelling (AAR). There are two approaches to aerial refuelling: the refuelling boom and "probe and drogue". Much as in autonomous landing, vision-based methods that let a UAV hold its pose and position relative to the tanker during docking and refuelling have received great attention. AAR must also account for several reference frames, such as those of the UAV, the tanker, and the camera. However, AAR is much more sensitive and faces more subtle air disturbances: it requires 0.5 to 1.0 cm accuracy in the relative position.
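Accounting for the UAV, tanker, and camera frames amounts to chaining rigid-body transforms. The sketch below assumes the vision system yields the tanker's pose in the camera frame (for example, from markers), that the camera mounting on the UAV is calibrated, and that the refuelling point is known in the tanker frame; `T_a_b` denotes the 4x4 transform taking coordinates in frame `b` to frame `a`, and all names and numbers are hypothetical.

```python
import numpy as np


def transform(R, t):
    """4x4 homogeneous transform from a rotation R (3x3) and a translation t (3,)."""
    T = np.eye(4)
    T[:3, :3] = R
    T[:3, 3] = t
    return T


def rot_z(angle_rad):
    """Rotation about the z axis."""
    c, s = np.cos(angle_rad), np.sin(angle_rad)
    return np.array([[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]])


def relative_position(T_body_cam, T_cam_tanker, target_in_tanker, probe_in_body):
    """Vector from the UAV's refuelling probe to the tanker's refuelling point.

    Chains tanker -> camera -> UAV body, so the result is in body axes (metres).
    """
    target_h = np.append(np.asarray(target_in_tanker, dtype=float), 1.0)
    target_body = (T_body_cam @ T_cam_tanker @ target_h)[:3]
    return target_body - np.asarray(probe_in_body, dtype=float)
```

Each link in the chain contributes its own error, so the 0.5 to 1.0 cm requirement applies to the accumulated result, not just to the vision measurement.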

A fixed number of visible optical markers is assumed to be available to help the vision subsystem with detection. However, temporary loss of visibility may occur because of hardware failures and/or physical interference. Fravolini et al. proposed a specific docking control scheme featuring a fusion of GPS and machine vision (MV) distance measurements to tackle this problem. Such studies are still at the simulation stage.
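Why fusing GPS and MV distances helps when markers drop out can be illustrated with a much simpler rule than a full docking control scheme: inverse-variance weighting, with a GPS-only fallback whenever the markers are not visible. This is not Fravolini et al.'s scheme; the function name and the idea of passing per-sensor variances are assumptions made for the example.

```python
import math
from typing import Optional, Tuple


def fuse_distance(gps_m: float, gps_var: float,
                  mv_m: Optional[float], mv_var: float) -> Tuple[float, float]:
    """Inverse-variance fusion of GPS and machine-vision distance estimates.

    Returns (fused_distance, fused_variance). When the optical markers are
    not visible (mv_m is None or NaN), the GPS estimate is used alone.
    """
    if mv_m is None or math.isnan(mv_m):
        return gps_m, gps_var
    w_gps = 1.0 / gps_var
    w_mv = 1.0 / mv_var
    fused = (w_gps * gps_m + w_mv * mv_m) / (w_gps + w_mv)
    return fused, 1.0 / (w_gps + w_mv)
```

When the markers are visible, the more precise MV measurement dominates; when they are lost, the estimate degrades gracefully to GPS instead of failing. For 3-D relative positions, the same idea uses covariance matrices, which is what a Kalman filter update does.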

Autonomous flight is the extension of autonomous landing. Ideally, it means that the UAV is capable of high-level environment understanding and decision making, e.g., determining its position, estimating its attitude, detecting and avoiding obstacles, and planning paths, without outside instruction, guidance, or intervention. Some studies on autonomous UAV manoeuvring have considered different conditions, including GPS signal failure and unstructured or unknown flying zones. Madison et al. discussed visual navigation of miniature UAVs in GPS-challenged environments, e.g., indoors.

The vision subsystem geolocates some landmarks while GPS provides accurate navigation. Once GPS becomes unavailable, the vision subsystem geolocates new landmarks using the predefined landmarks, and uses these landmarks to provide navigation information. Tests show that the drift of vision-aided navigation is significantly lower than that of inertial-only navigation. NASA Ames Research Centre runs a Precision Autonomous Landing Adaptive Control Experiment.
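The landmark bookkeeping described above can be sketched in simplified form. The sketch assumes the vision subsystem measures a 3-D offset from the vehicle to each landmark that is already rotated into world axes (i.e., attitude is known); with that assumption, the least-squares position fix is just an average, and a new landmark is geolocated from the current fix. Madison et al.'s actual estimator is not described here, and a practical system would fuse these fixes with inertial data in a filter; the function names are hypothetical.

```python
import numpy as np


def position_from_landmarks(landmarks_world, offsets):
    """Least-squares vehicle position from vision-measured offsets to known landmarks.

    Each offset is (landmark position - vehicle position) in world axes.
    """
    landmarks_world = np.asarray(landmarks_world, dtype=float)
    offsets = np.asarray(offsets, dtype=float)
    # Every landmark gives p = landmark - offset; the least-squares answer is the mean.
    return (landmarks_world - offsets).mean(axis=0)


def geolocate_new_landmark(vehicle_pos, offset):
    """World position of a newly observed landmark from the current position fix."""
    return np.asarray(vehicle_pos, dtype=float) + np.asarray(offset, dtype=float)
```

Because each new landmark inherits the error of the fix used to place it, drift still accumulates, but typically far more slowly than when integrating inertial measurements alone, which matches the test results reported above.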

In conclusion, the remote operation was successfully implemented using a very secure IRS Data Link for communication with the robot. Computer vision applied in the UAV made it possible to understand the nature of elements on the ground from a distance. Gesture control allowed various operations to be performed with hand gestures, with the corresponding motion achieved in the UAV. The robot hand grasping technology was very accurate in operating the UAV, reproducing fine, human finger-like detail. Overall, the operation of the UAV was successfully demonstrated, and this may greatly help the military in various operations.
