Published on Feb 14, 2025

High-performance computing (HPC) uses supercomputers and computer clusters to solve advanced computation problems. HPC has come to be applied to business uses of cluster-based supercomputers, such as data warehouses, line-of-business (LOB) applications, and transaction processing.

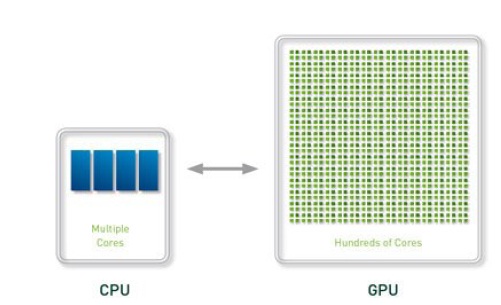

In the past few years, a new class of HPC systems has emerged. These systems employ unconventional processor architectures, such as IBM's Cell processor and graphics processing units (GPUs), for heavy computations and use conventional central processing units (CPUs) mostly for non-compute-intensive tasks, such as I/O and communication. Prominent examples of such systems include the Los Alamos National Laboratory's Cell-based Roadrunner and the Chinese National University of Defence Technology's ATI GPU-based Tianhe-1 cluster.

The main reason computational scientists consider accelerators is the need to increase application performance, whether to decrease compute time, to increase the size of the science problem they can compute, or both. The HPC space is challenging since it is dominated by applications that use 64-bit floating-point calculations and have frequent data reuse. As the size of conventional HPC systems increases, their space and power requirements and operational costs quickly outgrow the available resources and budgets.

Thus, metrics such as flops per machine footprint, flops per watt of power, or flops per dollar spent on the hardware and its operation are becoming increasingly important. Accelerator-based HPC systems look particularly attractive considering these metrics.

The accelerator technologies considered here are:

1. General-purpose graphics processing units (GPGPUs): specialized microprocessors that offload and accelerate 2D or 3D graphics rendering from the main microprocessor.

2. Field-programmable gate arrays (FPGAs): arrays of logic gates that can be hardware-programmed to fulfil user-specified tasks.

3. ClearSpeed floating-point accelerators.

4. IBM Cell processors.

Accelerators are computing components, containing functional units together with memory and control systems, that can be easily added to computers to speed up portions of applications. They can also be aggregated into groups to support acceleration of larger problem sizes.

Each accelerator being investigated has many (but not necessarily all) of the following features.

· A low clock frequency compared to CPUs

· Aggregate high performance is achieved through parallelism

· Transferring data between the accelerators and CPUs is slow compared to the memory bandwidth available for the primary processors

· Needs lots of data reuse for good performance

· The fewer the bits, the better the performance

· Integer is faster than 32-bit floating-point which is faster than 64-bit floating-point

· Learning the theoretical peak is difficult

· Software tools are lacking

· Requires programming in languages designed for the particular technology
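The interplay between the slow CPU-to-accelerator link and the need for data reuse can be made concrete with a back-of-the-envelope model. The sketch below is illustrative only: the function names `offload_time` and `cpu_time`, their parameters, and every number in the usage lines are hypothetical and are not measurements of any real system. It also assumes that transfer and computation do not overlap.

```python
def offload_time(bytes_moved, flops, link_gb_per_s, accel_gflops):
    """Estimated seconds to ship data over the host link and compute on the accelerator.

    Simplifying assumption: transfer and computation do not overlap.
    """
    transfer_s = bytes_moved / (link_gb_per_s * 1e9)
    compute_s = flops / (accel_gflops * 1e9)
    return transfer_s + compute_s


def cpu_time(flops, cpu_gflops):
    """Estimated seconds to run the same work on the CPU alone."""
    return flops / (cpu_gflops * 1e9)


# Hypothetical machine: 4 GB/s link, 100 Gflop/s accelerator, 10 Gflop/s CPU.
data_bytes = 1e9  # 1 GB shipped to the accelerator

# Low reuse: 1 flop per byte moved, so the transfer dominates and the CPU wins.
low_reuse_pays_off = offload_time(data_bytes, 1e9, 4.0, 100.0) < cpu_time(1e9, 10.0)

# High reuse: 100 flops per byte moved, so the accelerator wins.
high_reuse_pays_off = offload_time(data_bytes, 1e11, 4.0, 100.0) < cpu_time(1e11, 10.0)
```

With these made-up figures, offloading only pays off in the high-reuse case, which is the pattern the feature list describes.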

The IBM Professional Graphics Controller was one of the very first 2D/3D graphics accelerators available for the IBM PC. As the processing power of GPUs has increased, so has their demand for electrical power. High-performance GPUs often consume more energy than current CPUs and hence need a lot of cooling, so they are fine for a workstation but not for systems such as blades that are heavily constrained by cooling. However, floating-point calculations require much less power than graphics calculations.

So a GPU running floating-point code might use only half the power of one running pure graphics code. Most GPUs achieve their best performance by operating on four-tuples, each element of which is a 32-bit floating-point number. These four components are packed together into a 128-bit word that is operated on as a group, so it behaves like a vector of length four, similar to the SSE2 extensions on x86 processors. The ATI R580 has 48 functional units, each of which can process a 4-tuple per cycle, and each of those operations can be a MADD (multiply-add) instruction. At a frequency of 650 MHz, this results in a rate of 0.65 GHz × 48 functional units × 4 per tuple × 2 flops per MADD ≈ 250 Gflop/s.
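The peak-rate arithmetic above can be checked with a few lines of Python. This is a minimal sketch: the helper names `madd4` and `peak_gflops` are invented for illustration, and the sample 4-tuples are arbitrary values, not data from the source.

```python
def madd4(a, b, c):
    """Multiply-add on 4-tuples: returns a*b + c element-wise (2 flops per element)."""
    return tuple(x * y + z for x, y, z in zip(a, b, c))


def peak_gflops(clock_ghz, units, lanes_per_unit, flops_per_op=2):
    """Theoretical peak in Gflop/s: clock x functional units x lanes x flops per MADD."""
    return clock_ghz * units * lanes_per_unit * flops_per_op


# One 4-wide MADD on arbitrary sample values: 4 multiplies and 4 adds.
result = madd4((1.0, 2.0, 3.0, 4.0), (2.0, 2.0, 2.0, 2.0), (0.5, 0.5, 0.5, 0.5))

# ATI R580 figures from the text: 650 MHz, 48 units, 4 elements per tuple.
r580_peak = peak_gflops(0.65, 48, 4)  # about 249.6, quoted as 250 Gflop/s
```

The factor of 2 reflects the fact that a MADD counts as one multiply plus one add.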

The recent NVIDIA G80 GPU takes a different approach, since it includes 32-bit functional units instead of 128-bit ones. Each of the 128 scalar units runs at 1.35 GHz and can perform a single 32-bit floating-point MADD operation, so its theoretical peak is 1.35 GHz × 128 functional units × 2 flops per MADD ≈ 345 Gflop/s. Unfortunately, GPUs tend to have a small number of registers, so measured rates are frequently less than 10% of peak. GPUs do have very robust memory systems that are faster (but smaller) than those of CPUs. Maximum memory per GPU is about 1 GB, and memory bandwidth may exceed 40 GB/s. Today, parallel GPUs have begun making computational inroads against the CPU, and a subfield of research, dubbed GPU Computing or GPGPU for General Purpose Computing on GPU, has found its way into fields as diverse as oil exploration, scientific image processing, linear algebra[4], 3D reconstruction and even stock options pricing determination. NVIDIA's CUDA platform is the most widely adopted programming model for GPU computing, with OpenCL also being offered as an open standard.
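The gap between peak compute rate and memory bandwidth hints at why data reuse matters so much on a GPU. The rough calculation below combines the G80 peak with the 40 GB/s bandwidth figure; pairing those two numbers is an assumption made for illustration, since the text gives the bandwidth for GPUs in general rather than for the G80 specifically. The variable names are hypothetical.

```python
# G80 figures from the text: 1.35 GHz clock, 128 scalar units, 2 flops per MADD.
g80_peak_gflops = 1.35 * 128 * 2

# "May exceed 40 GB/s" in the text; treated here as an assumed round value.
mem_bandwidth_gb_s = 40.0

# Flops that must be performed per byte fetched from GPU memory to keep the units busy.
flops_per_byte = g80_peak_gflops / mem_bandwidth_gb_s

# One 32-bit operand is 4 bytes, so the same balance expressed per operand loaded.
flops_per_float32 = flops_per_byte * 4

# The text notes that measured rates are frequently below 10% of peak.
ten_percent_of_peak = 0.10 * g80_peak_gflops
```

Under these assumptions each 32-bit value loaded from memory would need to be used in roughly 35 floating-point operations to sustain the peak, which is only possible for kernels with heavy data reuse.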

The GPUs of the most powerful class typically interface with the motherboard by means of an expansion slot such as PCI Express (PCIe) or Accelerated Graphics Port (AGP) and can usually be replaced or upgraded with relative ease, assuming the motherboard is capable of supporting the upgrade. A few graphics cards still use Peripheral Component Interconnect (PCI) slots, but their bandwidth is so limited that they are generally used only when a PCIe or AGP slot is not available. A dedicated GPU is not necessarily removable, nor does it necessarily interface with the motherboard in a standard fashion.

The term "dedicated" refers to the fact that dedicated graphics cards have RAM that is dedicated to the card's use, not to the fact that most dedicated GPUs are removable. Dedicated GPUs for portable computers are most commonly interfaced through a non-standard and often proprietary slot due to size and weight constraints. Such ports may still be considered PCIe or AGP in terms of their logical host interface, even if they are not physically interchangeable with their counterparts. Technologies such as SLI by NVIDIA and CrossFire by ATI allow multiple GPUs to be used to draw a single image, increasing the processing power available for graphics.

Integrated graphics solutions, shared graphics solutions, or integrated graphics processors (IGPs) utilize a portion of a computer's system RAM rather than dedicated graphics memory. Computers with integrated graphics account for 90% of all PC shipments. These solutions are less costly to implement than dedicated graphics solutions, but are less capable. Historically, integrated solutions were often considered unfit to play 3D games or run graphically intensive programs but could run less intensive programs such as Adobe Flash. Examples of such IGPs would be offerings from SiS and VIA circa 2004. Today's integrated solutions, such as AMD's Radeon HD 3200 (AMD 780G chipset) and NVIDIA's GeForce 8200 (NVIDIA nForce 730a), are more than capable of handling 2D graphics from Adobe Flash or low-stress 3D graphics. Even so, most integrated graphics still struggle with high-end video games.

Chips like the NVIDIA GeForce 9400M in Apple's MacBook and MacBook Pro and AMD's Radeon HD 3300 (AMD 790GX) offer improved performance, but still lag behind dedicated graphics cards. Modern desktop motherboards often include an integrated graphics solution and have expansion slots available to add a dedicated graphics card later. As a GPU is extremely memory intensive, an integrated solution may find itself competing with the CPU for the already relatively slow system RAM, as it has minimal or no dedicated video memory. System RAM may offer 2 GB/s to 12.8 GB/s of bandwidth, yet dedicated GPUs enjoy from 10 GB/s to over 100 GB/s depending on the model. Older integrated graphics chipsets lacked hardware transform and lighting, but newer ones include it.

Hybrid graphics solutions are a newer class of GPUs that compete with integrated graphics in the low-end desktop and notebook markets. The most common implementations are ATI's HyperMemory and NVIDIA's TurboCache. Hybrid graphics cards are somewhat more expensive than integrated graphics, but much less expensive than dedicated graphics cards. They share memory with the system and have a small dedicated memory cache to make up for the high latency of the system RAM. Technologies within PCI Express make this possible. While these solutions are sometimes advertised as having as much as 768 MB of RAM, this refers to how much can be shared with the system memory.

A new concept is to use a general-purpose graphics processing unit as a modified form of stream processor. This concept turns the massive floating-point computational power of a modern graphics accelerator's shader pipeline into general-purpose computing power, as opposed to being hard-wired solely to do graphical operations. In certain applications requiring massive vector operations, this can yield several orders of magnitude higher performance than a conventional CPU. The two largest discrete GPU designers, ATI and NVIDIA, are beginning to pursue this new approach with an array of applications. Both NVIDIA and ATI have teamed with Stanford University to create a GPU-based client for the Folding@Home distributed computing project, for protein folding calculations. In certain circumstances the GPU calculates forty times faster than the conventional CPUs traditionally used by such applications.

Recently NVIDIA began releasing cards supporting an API extension to the C programming language called CUDA ("Compute Unified Device Architecture"), which allows specified functions from a normal C program to run on the GPU's stream processors. This makes C programs capable of taking advantage of a GPU's ability to operate on large matrices in parallel, while still making use of the CPU when appropriate. CUDA is also the first API to allow CPU-based applications to directly access the resources of a GPU for more general-purpose computing without the limitations of using a graphics API. Since 2005 there has been interest in using the performance offered by GPUs for evolutionary computation in general, and for accelerating the fitness evaluation in genetic programming in particular.

Most approaches compile linear or tree programs on the host PC and transfer the executable to the GPU to be run. Typically the performance advantage is only obtained by running the single active program simultaneously on many example problems in parallel, using the GPU's SIMD architecture. However, substantial acceleration can also be obtained by not compiling the programs and instead transferring them to the GPU to be interpreted there. Acceleration can then be obtained by interpreting multiple programs simultaneously, by running multiple example problems simultaneously, or by a combination of both. A modern GPU (e.g. 8800 GTX or later) can readily interpret hundreds of thousands of very small programs simultaneously.
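The interpreted approach can be sketched on the CPU to show the data layout involved. The code below is a hypothetical illustration, not the method of any particular system: it assumes tiny postfix (linear) programs over a single variable `x`, and names such as `interpret`, `fitness`, and `OPS` are invented here. Each stack slot holds one value per test case, mimicking how SIMD lanes would each carry a different example problem; on a GPU the loops over programs and test cases would be spread across threads rather than run sequentially.

```python
import operator

# Supported binary operators for the toy postfix language (an assumption).
OPS = {"+": operator.add, "-": operator.sub, "*": operator.mul}


def interpret(program, xs):
    """Run one postfix program on every test input at once.

    Each stack slot is a list with one value per test case.
    """
    stack = []
    for token in program:
        if token == "x":
            stack.append(list(xs))
        elif token in OPS:
            rhs, lhs = stack.pop(), stack.pop()
            stack.append([OPS[token](a, b) for a, b in zip(lhs, rhs)])
        else:
            # Any other token is treated as a numeric constant.
            stack.append([float(token)] * len(xs))
    return stack.pop()


def fitness(program, xs, targets):
    """Sum of absolute errors over all test cases (lower is better)."""
    return sum(abs(out - t) for out, t in zip(interpret(program, xs), targets))


# Hypothetical population of tiny programs and test cases for the target x*x + 1.
xs = [0.0, 1.0, 2.0, 3.0]
targets = [x * x + 1.0 for x in xs]
population = [["x", "x", "*", "1", "+"], ["x", "2", "*"], ["x", "1", "+"]]
scores = [fitness(p, xs, targets) for p in population]
```

Because every program is just data passed to the same interpreter, many programs and many test cases can be evaluated by identical code, which is what makes the scheme a fit for SIMD hardware.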

There are multiple families of accelerators suitable for executing applications from portions of HPC space. These include GPGPUs, FPGAs, ClearSpeed and the Cell processor. Each type is good for specific types of applications, but they all need applications with a high calculation to memory reference ratio.

They are best at the following:

· GPGPUs: graphics, 32-bit floating-point

· FPGAs: embedded applications, applications that require a small number of bits

· ClearSpeed: matrix-matrix multiplication, 64-bit floating-point

· Cell: graphics, 32-bit floating-point

Common traits for accelerators today include slow clock frequencies, performance achieved through parallelism, low-bandwidth connections to the CPU, and a lack of standard software tools.

1 https://www.computer.org/portal/web/csdl/magazines/cise#4

2 https://www.hp.com/techservers/hpccn/hpccollaboration/ADCatalyst/downloads/accelerators.pdf

3 https://en.wikipedia.org/wiki/Graphics_processing_unit

4 https://www.scientificcomputing.com/HPC-Future.aspx

5 https://www.nvidia.com/object/fermi_architecture.html

6 https://www.xilinx.com/support/documentation/white_papers/wp375_HPC_Using_FPGAs.pdf

7 https://www.top500.org/lists/2010/06
